http://jenetics.io/ http://casser.io/medical-nlp/

Computational intelligence is rooted in the artificial neural network and evolutionary algorithms. No free lunch theorem implies that searching for the 'best' general purpose black box optimization algorithm is irresponsible as no such procedure is theoretically possible.

| Swarm intelligence based algorithms | Bio-inspired (not SI-based) algorithms | Physics and Chemistry based algorithms | Others |

|---|---|---|---|

| Ant Colony Optimization | Genetic Algorithm | Simulated Annealing | Fuzzy Intelligence |

| Particle Swarm Optimization | Differential Evolution | Gravitational Search | Tabu Search |

| More | More | More | More |

See the article A Brief Review of Nature-Inspired Algorithms for Optimization for more nature-inspired optimization algorithms or Clever Algorithms: Nature-Inspired Programming Recipes for nature-inspired AI.

| Computational Intelligence |

|---|

|

In IEEE Computational Intelligence Society, computational intelligence is introduced as follows:

Computational Intelligence (CI) is the theory, design, application and development of biologically and linguistically motivated computational paradigms. Traditionally the three main pillars of CI have been Neural Networks, Fuzzy Systems and Evolutionary Computation. However, in time many nature inspired computing paradigms have evolved. Thus CI is an evolving field and at present in addition to the three main constituents, it encompasses computing paradigms like ambient intelligence, artificial life, cultural learning, artificial endocrine networks, social reasoning, and artificial hormone networks. CI plays a major role in developing successful intelligent systems, including games and cognitive developmental systems. Over the last few years there has been an explosion of research on Deep Learning, in particular deep convolutional neural networks. Nowadays, deep learning has become the core method for artificial intelligence. In fact, some of the most successful AI systems are based on CI.

- https://www.tecweb.org/styles/gardner.html

- http://www.mae.cuhk.edu.hk/~cil/index.htm

- http://cogprints.org/

- http://ai.berkeley.edu/lecture_videos.html

- http://www.comp.hkbu.edu.hk/~hknnc/index.php

- https://www.cleverism.com/artificial-intelligence-complete-guide/

- https://www.eurekalert.org/pub_releases/2018-03/uom-csw032918.php

- Computational Intelligence: An Introduction

Some packages:

- https://github.com/facebookresearch/nevergrad

- https://github.com/DEAP/deap

- https://github.com/SioKCronin/swarmopt

- https://cilib.net/

- http://fuzzy.cs.ovgu.de/nefcon/

Some courses:

- http://www.cs.armstrong.edu/saad/csci8100/

- http://www.cis.pku.edu.cn/faculty/system/tanying/CI/CIlecture1.pdf

- https://people.eecs.berkeley.edu/~russell/papers/mitecs-computational-intelligence.pdf

- http://www.soft-computing.de/def.html

- http://mat.uab.cat/~alseda/MasterOpt/index.html

Optimization is to minimize the cost or maximize the utility. Particularly, we call it as numerical optimization if the cost or utility can be formulated in mathematical form. Some general principles are written in the form of optimization such as Maximum Entropy Principle. It raises in operation research, numerical analysis, computational mathematics and more technological applications such as the engine design. It is clear that optimization relies on at least perspectives the objective to optimize and the approach to optimize the objective with or without some constraints.

Another topic on computational intelligence is to search some object or goals in complex and uncertain system. Optimization is to search the best or optimal configuration in some sense. Search is to explore the system with some fuzzy goals.

Evolution or adaptation is in the level of population, which leads to this diverse earth. And this is an important criteria which differs from other entities. It is related with transfer learning. Like in ecosystem, what the computational learners can do if the system changes a lot in order to survive? In numerical optimization, if the objective function changes, we may consider different optimization methods.

Swarm intelligence is the study of computational systems inspired by the 'collective intelligence'. Collective Intelligence emerges through the cooperation of large numbers of homogeneous agents in the environment. Swarm intelligence believes that scale matters. And agents in different scale are different: more agents, more power.

- Particle Swarm Optimization(PSO)

- Accelerated PSO

- Ant Colony Optimization

- Fish swarm/school

- http://www.scholarpedia.org/article/Swarm_intelligence

Particle Swarm Optimization(PSO)

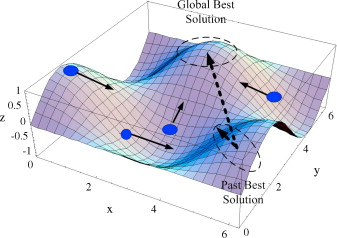

Individuals in a particle swarm follow a very simple behavior: to emulate the success of neighboring individuals and their own successes. The collective behavior that emerges from this simple behavior is that of discovering optimal regions of a high dimensional search space.

In simple terms, the particles are "flown" through a multidimensional search space, where the position

of each particle is adjusted according to its own experience and that of its neighbors.

The position of the particle is changed

by adding a velocity,

x_{i}(t+1) = x_{i}(t)+v_i(t+1)

with

Each particle’s velocity is updated using this

equation at time

$$ v_{i+1}(t)=\omega v_{i}(t)+c_1r_1[\hat{x}{i}(t)-x{i}(t)]+c_2r_2[g(t)-x_i(t)] $$ where the notations will explain in the following table:

| the inertial coefficient usually in |

acceleration coefficients in [0,2] | random values in [0,1] at each update | |||

| the particle’s velocity at time |

the particle’s individual best solution as of time${t}$ | the swarm’s best solution as of time |

Inertia Component

- Keeps the particle moving in the same direction it was originally heading.

- Lower values speed up convergence, higher values encourage exploring the search space.

Cognitive Component $c_1r_1[\hat{x}{i}(t)-x{i}(t)]$:

- Acts as the particle’s memory, causing it to return to its individual best regions of the search space;

- Cognitive coefficient

$c_1$ usually close to 2 - Coefficient limits the size of the step the particle takes toward its individual best

$\hat{x}_i(t)$ .

Social Component

- Causes the particle to move to the best regions the swarm has found so far;

- Social coefficient

$c_2$ usually close to 2; - Coefficient limits the size of the step the particle takes toward the global best

$g$ .

It does not compute the gradient of the function. And like accelerated gradient, it uses the previous iteration result.

- http://www.cs.armstrong.edu/saad/csci8100/pso_slides.pdf

- http://www.swarmintelligence.org/tutorials.php

- https://github.com/tisimst/pyswarm

- http://mnemstudio.org/particle-swarm-introduction.htm

- https://arxiv.org/ftp/arxiv/papers/1804/1804.05319.pdf

Ant Colony Optimization

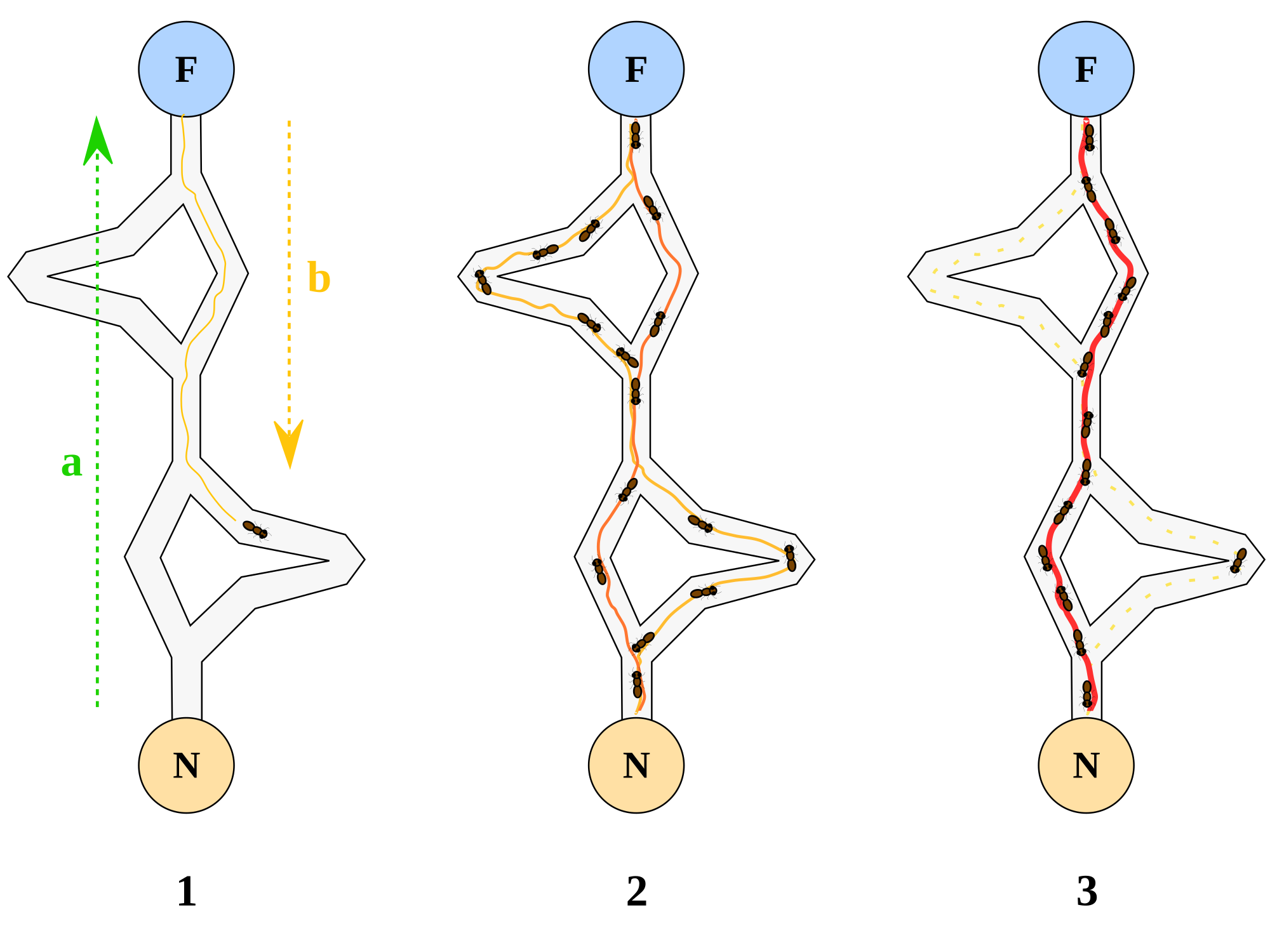

It is an meta-heuristic optimization for searching for optimal path in the graph based on behaviour of ants seeking a path between their colony and source of food.

Overview of the System:

- Virtual trail accumulated on path segments;

- Path selected at random based on amount of "trail" present on possible paths from starting node ;

- Ant reaches next node, selects next path;

- Continues until reaches starting node;

- Finished tour is a solution;

- Tour is analyzed for optimality.

An ant will move from node i to node j with probability

$$

p_{ij} = \frac{(\tau_{ij}^{\alpha})(\eta_{ij}^{\beta})}{\sum (\tau_{ij}^{\alpha})(\eta_{ij}^{\beta})}

$$

where

Amount of pheromone is updated according to the equation

$$

\tau_{ij} = (1-\rho)\tau_{ij} +\Delta \tau_{ij}

$$

where

The three most successful special cases of the ACO metaheuristic are: Ant System, Ant Colony System (ACS), and MAX-MIN Ant System (MMAS).

Ant System

Pheromone values are updated by all the ants that have completed the tour:

Ant Colony System

Ants in ACS use the pseudorandom proportional rule:

- Probability for an ant to move from city i to city j depends on a random variable

${q}$ uniformly distributed over [0, 1], and a parameter$q_0$ . - If

$q\leq q_0$ , then, among the feasible components, the component that maximizes the product$\tau_{il}\eta_{il}^{\beta}$ is chosen, otherwise the same equation as in Ant System is used. - This rule favours exploitation of pheromone information.

Each ant applies it only to the last edge traversed

where

- http://mat.uab.cat/~alseda/MasterOpt/ACO_Intro.pdf

- https://www.ics.uci.edu/~welling/teaching/271fall09/antcolonyopt.pdf

- http://www.aco-metaheuristic.org/

- http://mathworld.wolfram.com/AntColonyAlgorithm.html

AFSA (artificial fish-swarm algorithm)

Let

where

- https://link.springer.com/article/10.1007/s10462-012-9342-2

- http://cloudbus.org/~adel/pdf/AIR2014.pdf

- http://www.mecs-press.org/ijisa/ijisa-v2-n1/IJISA-V2-N1-6.pdf

- http://www.scielo.br/pdf/lajss/v11n1/09.pdf

Stochastic Diffusion Search(SDS)

In contrast to many nature-inspired algorithms, SDS has a strong mathematical framework describing its behaviour and convergence.

Stochastic Diffusion Search (SDS) was first proposed by Bishop (1989) as a population based best-fit pattern matching algorithm. Such algorithms can also be recast in terms of an optimisation by defining the objective function,

In general, SDS can most easily be applied to optimisation problems where the objective function is decomposable into components that can be evaluated independently. In order to locate the optima of a given objective function SDS employs a swarm of n agents, each of which maintains a hypothesis,

- http://www.scholarpedia.org/article/Stochastic_diffusion_search

- http://www.doc.gold.ac.uk/~mas02gw/MSCI11/2010/1/SDS_Review_27%20Sep%202010.pdf

- http://trac.mystic.cacr.caltech.edu/project/mystic/wiki.html

In machine learning, it is always thought in the representation + evaluation + optimization way. For specific tasks, we represent the conditions or inputs in binary code so that the computer can handle them; then a family of mathematical model is choose to transform and process the inputs. And an evaluation as a performance metric is used to show how good a model is such as the loss function in supervised machine learning. An optimization method is used to find the best model with respect to the specified evaluation. Usually these are not dynamics.

However, it is not the fact in nature. It is not necessary to compute the gradient or the expected regards before making any decision in our brain. See the differences between the machine learning and biological learning in their websites: Machine Learning Group and Biological Learning Group.

In this section, we will focus on the following topics.

- Differential evolution

- Genetic Algorithms

- Biogeography-based optimization

- Neuroscience-Inspired Artificial Intelligence

Terrence J. Sejnowski in The Deep Learning Revolution asserts that

The only existence proof that any of the hard problems in artificial intelligence can be solved is the fact that, through evolution, nature has already solved them.

However, the evolution itself is not clear for us since the Darwin's Theory Of Evolution was proposed. Natural selection is really based on the principles of physics and chemistry, but not all animals live in the world understand physics or chemistry. From different levels and perspectives, we would research the adaptiveness, intelligence and evolution.

Deep learning is in the level of networks. Artificial Immune System is in the level of systems.

Evolutionary Computation(EC)

Evolutionary computation: is likely to be the next major transition of artificial intelligence. In EC, core concepts from evolutionary biology — inheritance, random variation, and selection — are harnessed in algorithms that are applied to complex computational problems. The field of EC, whose origins can be traced back to the 1950s and 60s, has come into its own over the past decade. EC techniques have been shown to solve numerous difficult problems from widely diverse domains, in particular producing human-competitive machine intelligence. EC presents many important benefits over popular deep learning methods includes a far lesser extent on the existence of a known or discoverable gradient within the search space.

- https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5534026/

- Evolutionary Computation,

- Introduction to Evolutionary Computing by A.E. Eiben and J.E. Smith,

- Understanding Evolution

- https://github.com/lmarti/evolutionary-computation-course

- http://lmarti.com/aec-2014

Differential Evolution(DE)

Differential evolution is capable of handling nondifferentiable, nonlinear and multimodal objective functions. It has been used to train neural networks having real and constrained integer weights.

In a population of potential solutions within an n-dimensional search space, a fixed number of vectors are randomly initialized, then evolved over time to explore the search space and to locate the minima of the objective function.

At each iteration, called a generation, new vectors are generated by the combination of vectors randomly chosen from the current population (mutation). The outcoming vectors are then mixed with a predetermined target vector. This operation is called recombination and produces the trial vector. Finally, the trial vector is accepted for the next generation if and only if it yields a reduction in the value of the objective function. This last operator is referred to as a selection. Adopted from MathWorld.

- DE

- Differential Evolution (DE) for Continuous Function Optimization (an algorithm by Kenneth Price and Rainer Storn)

Genetic Algorithm

Genetic Algorithms (GAs) mimic natural selection in order to solve several types of problems, including function optimization, scheduling, and combinatorial problems. To do this, the GA maintains and "evolves" over time a population of individual solutions to the problem at hand. Each generation of individual solutions (hopefully) becomes better and better, until some individual in the population represents an acceptable solution. Two of the most important characteristics of GAs are the representation used and the genetic operators employed.

In GAs, representation is how we encode solutions to a problem. For example, individuals in the population of a GA may be simple binary strings, or they may be a series of integers or floating point numbers in some specified range. Aside from representation, another facet that may affect GA performance is the set of genetic operators used. Genetic operators are the means by which the population evolves towards a solution. In a simple GA, these operators may be classified as crossover, mutation, and selection operators.

- Genetic Algorithms.

- http://www.genetic-programming.com/gecco2004tutorial.pdf

- http://mathworld.wolfram.com/GeneticAlgorithm.html

- http://mat.uab.cat/~alseda/MasterOpt/Dziedzic.GA_intro.pdf

- http://mat.uab.cat/~alseda/MasterOpt/Beasley93GA1.pdf

- http://mat.uab.cat/~alseda/MasterOpt/Beasley93GA2.pdf

Artificial Immune Sysytems

Artificial immune systems (AISs) are inspired by biological immune systems and mimic these by means of computer simulations. They are seen with interest from immunologists as well as engineers. Immunologists hope to gain a deeper understanding of the mechanisms at work in biological immune systems. Engineers hope that these nature-inspired systems prove useful in very difficult computational tasks, ranging from applications in intrusion-detection systems to general optimization. Moreover, computer scientists identified artificial immune systems as another example of a nature-inspired randomized search heuristic (like evolutionary algorithms, ant colony optimization, particle swarm optimization, simulated annealing, and others) and aim at understanding their potential and limitations. While the relatively new field has its successful applications and much potential its theoretical foundation is still in its infancy. Currently there are several not well connected strands within AIS theory, not even a general agreement on what the central open problems are, and only a weak connection between AIS theory and AIS applications. The main goals of the proposed seminar include bringing together computer scientists and engineers to strengthen the connections within AIS theory, connections to other researchers working on the theory of randomized search heuristics, and to improve connectivity between AIS theory and applications. Adopted from Artificial Immune Systems seminar

- http://www.artificial-immune-systems.org/

- https://www.sciencedirect.com/science/article/pii/S1568494610002723

Neuroscience-Inspired Artificial Intelligence

| AI and Beyond |

|---|

|

- Neuroscience-Inspired Artificial Intelligence

- Computational Perception & Cognition

- https://www.cbicr.tsinghua.edu.cn/

- https://blog.csdn.net/u013088062/article/details/50489674

- https://zhuanlan.zhihu.com/p/23965227

- https://zhuanlan.zhihu.com/p/30190719

- https://zhuanlan.zhihu.com/p/20726556

- https://zhuanlan.zhihu.com/p/23782226

- https://zhuanlan.zhihu.com/p/23804250

- https://zhuanlan.zhihu.com/p/23979871

- https://www.zhihu.com/question/59408117/answer/164972455

- http://www.psy.vanderbilt.edu/courses/hon1850/Brain_Structure_Function_Chapter.pdf

- Simulated annealing

- Gravitational search

- Stochastic diffusion search

Simulated Annealing(SA)

Simulated Annealing (SA) is an effective and general form of optimization. It is useful in finding global optima in the presence of large numbers of local optima. "Annealing" refers to an analogy with thermodynamics, specifically with the way that metals cool and anneal. Simulated annealing uses the objective function of an optimization problem instead of the energy of a material.

Implementation of SA is surprisingly simple. The algorithm is basically hill-climbing except instead of picking the best move, it picks a random move. If the selected move improves the solution, then it is always accepted. Otherwise, the algorithm makes the move anyway with some probability less than 1. The probability decreases exponentially with the "badness" of the move, which is the amount

- http://www.theprojectspot.com/tutorial-post/simulated-annealing-algorithm-for-beginners/6

- http://mathworld.wolfram.com/SimulatedAnnealing.html

- http://www.cs.cmu.edu/afs/cs.cmu.edu/project/learn-43/lib/photoz/.g/web/glossary/anneal.html

- http://120.52.51.16/web.mit.edu/dbertsim/www/papers/Optimization/Simulated%20annealing

- http://mat.uab.cat/~alseda/MasterOpt/ComprehensiveSimulatedAnnealing.pdf

Gravitational Search Algorithms(GSA)

Gravitational Search Algorithms (GSA) are heuristic optimization evolutionary algorithms based on Newton's law of universal gravitation and mass interactions. GSAs are among the most recently introduced techniques that are not yet heavily explored. They are adapted to the cell placement problem, and it is shown its efficiency in producing high quality solutions in reasonable time. Adopted from On The Performance of the Gravitational Search Algorithm

- http://home.ijasca.com/data/documents/ijasc08_published.pdf

- https://www.sciencedirect.com/science/article/pii/S0020025509001200

Fireworks Algorithm

- http://www.cil.pku.edu.cn/research/fwa/publication/IntroducetoFireworksAlgorithm.pdf

- http://adsabs.harvard.edu/abs/2018EnOp...50.1829G

- https://msdn.microsoft.com/en-us/magazine/dn857364.aspx

- Fuzzy Intelligence

- Tabu Search

- Differential search algorithm

- Backtracking optimization search

- https://readyforai.com/

- http://cleveralgorithms.com/

- https://course.elementsofai.com/

- https://intelligence.org/blog/

- https://github.com/Siyeong-Lee/CIO

- https://github.com/owainlewis/awesome-artificial-intelligence

- https://github.com/JordanMicahBennett/Supermathematics-and-Artificial-General-Intelligence

- https://github.com/gopala-kr/AI-Learning-Roadmap

- http://emergentcomputation.com/Pseudo.html

- https://wiki.opencog.org/w/Special:WhatLinksHere/The_Open_Cognition_Project

- 人工智能(AI)资料大全 - 猿助猿的文章 - 知乎

- Zeroth-Order Method for Distributed Optimization With Approximate Projections

- Derivative-Free Optimization (DFO)

- Derivative Free Optimization / Optimisation sans Gradient

- Delaunay-based derivative-free optimization via global surrogates, part I: linear constraints

- Delaunay-based derivative-free optimization via global surrogates, part II: convex constraints

- https://projects.coin-or.org/Dfo

- https://nlopt.readthedocs.io/en/latest/NLopt_Algorithms/

- http://adl.stanford.edu/aa222/Lecture_Notes_files/chapter6_gradfree.pdf

- https://code.fb.com/ai-research/nevergrad/

- https://github.com/facebookresearch/nevergrad

- https://www.kthohr.com/optimlib.html

- https://www.infoq.com/presentations/black-box-optimization