GPU 0 is also used when running on other GPUs (#440 reoccurred?) #2186

Comments

Thanks for the report. We've seen this as well; a fix is forthcoming.

Closing as fixed.

@shelhamer Thanks. So has the fix PR been merged?

It should be fixed, yes. Sorry, but I can't find the PR number at the moment.

@shelhamer Thanks. I just checked the RC5 version and it seems to be working!

@sczhengyabin Have you fixed it? I'm hitting the same problem as you. Is the Python layer related to the issue?

@646677064 Examples:

@646677064 @sczhengyabin

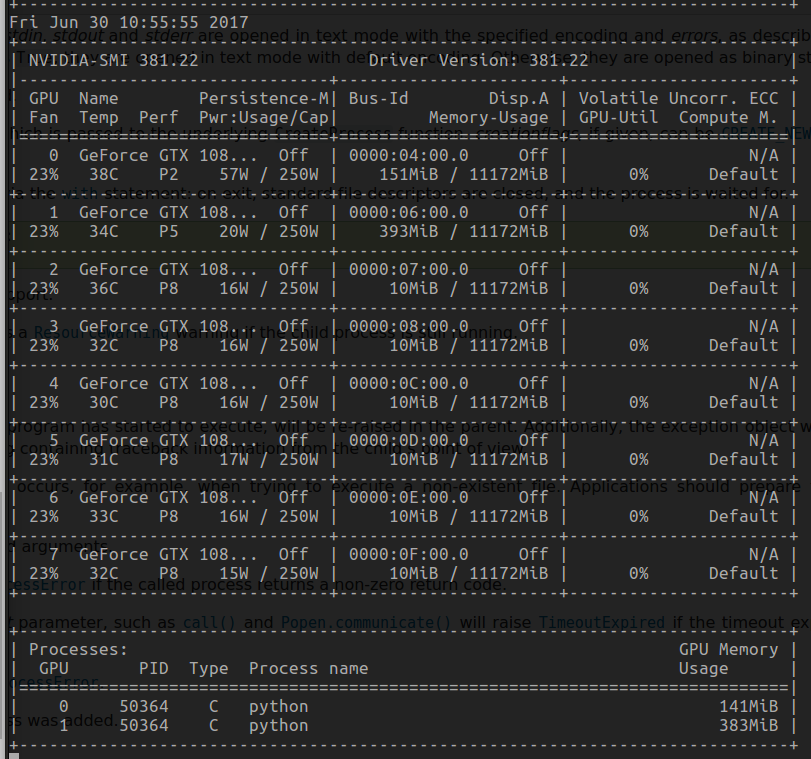

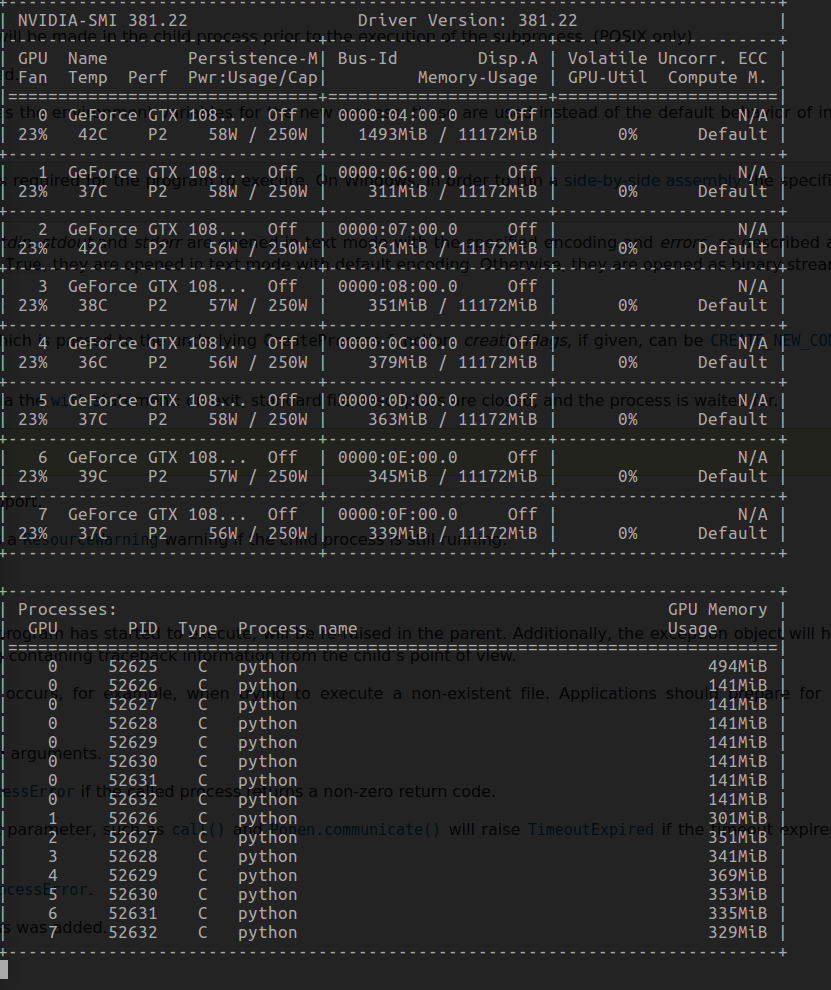

I just built caffe-rc2 with CUDA 7.0 and driver 346.47. When running the tests on my first GPU (id 0), everything works fine. However, when running the tests on the second GPU (id 1, or `build/test/test_all.testbin 1`), the command `nvidia-smi` shows that both GPUs are being used. This does not happen when I run the tests on GPU 0, nor when I run them with caffe-rc1 (built with CUDA 6.5 a while ago). I tried building caffe-rc2 with CUDA 6.5, and the problem persists.

After setting `export CUDA_VISIBLE_DEVICES=1` and running `build/test/test_all.testbin 0`, the problem disappeared. So this seems to be the same kind of problem as #440?

Update: when I ran `build/test/test_all.testbin 1 --gtest_filter=DataLayerTest*` with GPU 0's memory filled up by other software (cuda_memtest in my case), the program failed:

With other test categories (at least `NeuronLayerTest` and `FlattenLayerTest`), the program works fine.
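The `CUDA_VISIBLE_DEVICES` workaround described above can be scripted. The following is a minimal sketch, not part of Caffe: the helper names `env_for_gpu` and `run_on_gpu` are hypothetical, and it assumes the test binary takes the device id as its first argument, as in the commands quoted in this report. Because the CUDA runtime renumbers the visible devices starting at 0, the child process is always told to use device 0.

```python
import os
import subprocess


def env_for_gpu(physical_gpu_id, base_env=None):
    """Return a copy of the environment that exposes a single physical GPU.

    Hypothetical helper for illustration only.
    """
    env = dict(os.environ if base_env is None else base_env)
    # Only the listed device is enumerated by the CUDA runtime,
    # and inside the child process it is seen as device 0.
    env["CUDA_VISIBLE_DEVICES"] = str(physical_gpu_id)
    return env


def run_on_gpu(command, physical_gpu_id):
    """Run `command` (a list of arguments) with only one GPU visible.

    Returns the exit code of the child process.
    """
    result = subprocess.run(command, env=env_for_gpu(physical_gpu_id))
    return result.returncode


# Example (assumes the binary from this report exists at that path):
# run_on_gpu(["build/test/test_all.testbin", "0"], physical_gpu_id=1)
```

This only hides GPU 0 from the process; it does not address why the unpatched build touches GPU 0 in the first place.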