
bpf_redirect_map/bpf_redirect performance using generic xdp #60


|

Sorry, that diagram is not enough to explain what you're doing. Could you please list the traffic flow including all interfaces involved, how you generate the traffic, and which hooks are running which BPF programs?

|

Sure, thx for the fast response. I'm using two interfaces, veth0 and eno5. For the flow:

Step 1: Generating traffic with trafgen using the following command:

```bash
trafgen -i ./trafgen_1500 -o veth0 -b 1Gbit -P 1
```

where "trafgen_1500" contains the following:

```
{
  eth(da="destination MAC address of the machine I'm sending to", sa="source MAC address of eno5",type=0x8100)
  vlan(tci=2048,1q)
  ipv6(da="destination IP of the machine I'm sending to", sa="source IP of eno5")
  rnd(1442)
}
```

Because the traffic contains a VLAN tag, I disabled VLAN offloading with ethtool for the interface eno5.

Step 2: Listening for egress packets on interface veth0 and redirecting the traffic towards the ingress direction of eno5, using eBPF TC on the virtual interface "veth0", attached by:

```bash
sudo tc qdisc add dev veth0 clsact
sudo tc filter add dev veth0 egress prio 1 handle 1 bpf da obj ./tc_redirect.o sec tc_redirect
```

Step 3: Modifying (left out here because the issue also appeared without the modification) and redirecting the traffic on the physical interface "eno5" using the generic XDP mode. The redirect stays on the same interface "eno5":

I hope that clears some of the questions.

|

JustusvonderBeek <notifications@github.com> writes:

> I hope that clears some of the questions.

Yeah, it helps with understanding *what* you're doing. What's left is why would you do something like this? :)

And no, I don't have any good ideas for why you're seeing packet drops. Something about CPU affinity, perhaps, or maybe the packet generator is not generating complete packets (checksum error?).

Have you identified where the drops happen? If you put counters into the BPF programs you should be able to see which redirect is failing...
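A rough sketch of the userspace side of the counter idea, under assumptions not taken from the thread: each BPF program bumps its own slot in a BPF_MAP_TYPE_PERCPU_ARRAY, and the per-CPU values have already been read (for example with libbpf's bpf_map_lookup_elem()). The stage names and helpers are hypothetical. Note that a BPF counter only sees what happens up to the program's return, so losses in the kernel's TX path after a redirect have to be compared against NIC counters instead.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical stage indices: one counter per point in the
 * veth0 (TC egress) -> eno5 (XDP) -> eno5 (TX) chain. */
enum stage {
    STAGE_TC_VETH0_EGRESS,   /* incremented by the TC program on veth0 */
    STAGE_XDP_ENO5_RX,       /* incremented at the top of the XDP program */
    STAGE_ENO5_TX,           /* taken from NIC counters, e.g. ethtool -S */
    NUM_STAGES
};

/* Userspace lookups on a per-CPU map return one value per possible CPU;
 * the total for a stage is the sum over all CPUs. */
static uint64_t sum_percpu(const uint64_t *per_cpu, size_t ncpus)
{
    uint64_t total = 0;
    for (size_t i = 0; i < ncpus; i++)
        total += per_cpu[i];
    return total;
}

/* Return the first stage that saw fewer packets than the stage before it
 * (i.e. where packets went missing), or -1 if no loss is visible. */
static int first_lossy_stage(const uint64_t totals[NUM_STAGES])
{
    for (int s = 1; s < NUM_STAGES; s++)
        if (totals[s] < totals[s - 1])
            return s;
    return -1;
}
```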

|

I thought I could already write XDP code for the case when the XDP egress hook point gets ready. :) I tested the redirects with counters and found that I receive all packets until the interface eno5. After the second redirect from eno5 towards egress they get dropped.

Is there a way to pin the execution on one specific CPU?

Regarding the packet generator part, this would mean the packets would be dropped by the kernel on the receiving machine, right? Because this is not the case.

|

JustusvonderBeek <notifications@github.com> writes:

>> Yeah, it helps with understanding *what* you're doing. What's left is why would you do something like this? :)
>
> I thought I could already write XDP code for the case when the XDP egress hook point gets ready. :)

It's possible to write BPF code that you can use on both the TC and XDP hooks; see this example:

https://github.com/xdp-project/bpf-examples/tree/master/encap-forward

I would recommend that over this convoluted redirect scheme :)
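To illustrate the pattern behind encap.h, here is a plain-C sketch (not the code from that repository): the packet logic lives in one function that only sees a [data, data_end) range with an explicit bounds check, and two thin wrappers adapt the TC and XDP contexts to it. The context structs are hypothetical stand-ins so the sketch compiles outside the kernel, and the VLAN-ID rewrite is just an example modification; a real BPF build would use struct __sk_buff and struct xdp_md from <linux/bpf.h>.

```c
#include <stdint.h>

#define ETH_HLEN    14      /* dst MAC, src MAC, EtherType */
#define VLAN_HLEN   4       /* 802.1Q: TPID sits in the EtherType slot, then the TCI */
#define ETH_P_8021Q 0x8100

/* Shared helper: it only touches [data, data_end), so both a TC and an XDP
 * entry point can call it. Rewrites the VLAN ID of an 802.1Q-tagged frame,
 * keeping the PCP/DEI bits. Returns 0 on success, -1 if the frame is too
 * short or not tagged. */
static int set_vlan_id(uint8_t *data, const uint8_t *data_end, uint16_t vid)
{
    /* Same role as the bounds check the BPF verifier insists on. */
    if (data_end - data < ETH_HLEN + VLAN_HLEN)
        return -1;
    uint16_t tpid = (uint16_t)((data[12] << 8) | data[13]);
    if (tpid != ETH_P_8021Q)
        return -1;
    uint16_t tci = (uint16_t)((data[14] << 8) | data[15]);
    tci = (uint16_t)((tci & 0xF000) | (vid & 0x0FFF));
    data[14] = (uint8_t)(tci >> 8);
    data[15] = (uint8_t)(tci & 0xFF);
    return 0;
}

/* Hypothetical stand-ins for struct __sk_buff and struct xdp_md. In real BPF
 * code both expose data/data_end as __u32 fields that are cast to pointers
 * with (void *)(long)ctx->data. */
struct fake_skb    { uint8_t *data; uint8_t *data_end; };
struct fake_xdp_md { uint8_t *data; uint8_t *data_end; };

/* Thin per-hook wrappers; real ones would map the result to TC_ACT_* or XDP_* codes. */
static int tc_entry(struct fake_skb *skb)     { return set_vlan_id(skb->data, skb->data_end, 100); }
static int xdp_entry(struct fake_xdp_md *ctx) { return set_vlan_id(ctx->data, ctx->data_end, 100); }
```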

What's your application? If you're only targeting forwarded traffic (i.e., that goes through XDP_REDIRECT), there's already a hook in the devmap that is per map entry (which for redirected traffic semantically corresponds to a TX hook, just slightly earlier in the call chain).

> I tested the redirects with counters and found that I receive all packets until the interface eno5. After the second redirect from eno5 towards egress they get dropped.

Right, figured that would be the most likely place. So apart from the CPU or checksum issues I already mentioned, another possible reason is simply that the hardware is overwhelmed. XDP_REDIRECT bypasses the qdisc layer, so there's no buffering if the hardware can't keep up. So if the traffic generator is bursty I wouldn't be surprised if it could overwhelm the hardware...

>> Something about CPU affinity, perhaps,
>
> Is there a way to pin the execution on one specific CPU?

I *think* that when traffic comes from a userspace application it'll just stay on the CPU that the application is running on, so any standard mechanism to pin your workload ought to work.
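One such standard mechanism, as a sketch: the Linux sched_setaffinity() call restricts the calling thread to a single CPU. The helper below (its name is hypothetical) picks the first CPU the process is already allowed to use; the command-line equivalent is taskset from util-linux, e.g. `taskset -c 2 <command>`.

```c
#define _GNU_SOURCE   /* for cpu_set_t and the CPU_* macros in <sched.h> */
#include <sched.h>

/* Pin the calling thread to the first CPU in its current affinity mask.
 * Returns that CPU number, or -1 on error. */
static int pin_to_first_allowed_cpu(void)
{
    cpu_set_t allowed;
    CPU_ZERO(&allowed);
    if (sched_getaffinity(0, sizeof(allowed), &allowed) != 0)
        return -1;

    for (int cpu = 0; cpu < CPU_SETSIZE; cpu++) {
        if (!CPU_ISSET(cpu, &allowed))
            continue;
        cpu_set_t one;
        CPU_ZERO(&one);
        CPU_SET(cpu, &one);
        /* pid 0 means the calling thread; it is moved to `cpu` if needed. */
        return sched_setaffinity(0, sizeof(one), &one) == 0 ? cpu : -1;
    }
    return -1;
}
```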

>> or maybe the packet generator is not generating complete packets (checksum error?).
>
> Regarding the packet generator part, this would mean the packets would be dropped by the kernel on the receiving machine, right? Because this is not the case.

Not necessarily. There could be a check in the driver or hardware. Have you looked at the ethtool counters (ethtool -S)?

|

I'm not sure if I understand the example correctly but you probably mean the "encap.h" file used in both the TC and XDP implementation right? I guess I will give it a try then.

Yes, the XDP program should handle forwarded traffic. But I don't understand how the hook in the devmap is supposed to work?

I also thought about the fact that the network device cannot keep up with the speed or the copying takes too long. But I tested the same setup without limiting the traffic generator in throughput. This generates around 4Gbit/s of 1500B packets and results in around 1Gbit/s of packets on eno5. So the speed can be achieved, but somewhere in my admittedly confusing setup I lose / drop around 70% of the packets.

So on the receiving machine I do see all packets that are seen by the interface eno5. That includes the counters from ethtool -S. I also checked dropwatch again and now it is spitting out:

```bash
<num> drops at generic_xdp_tx+f1
```

So that seems to make sense but the question now is why? :)

|

JustusvonderBeek <notifications@github.com> writes:

>> It's possible to write BPF code that you can use on both the TC and XDP hooks; see this example:
>>
>> https://github.com/xdp-project/bpf-examples/tree/master/encap-forward
>
> I'm not sure if I understand the example correctly but you probably mean the "encap.h" file used in both the TC and XDP implementation right? I guess I will give it a try then.

Yup, exactly!

>> What's your application? If you're only targeting forwarded traffic (i.e., that goes through XDP_REDIRECT), there's already a hook in the devmap that is per map entry (which for redirected traffic semantically corresponds to a TX hook, just slightly earlier in the call chain).
>
> Yes, the XDP program should handle forwarded traffic. But I don't understand how the hook in the devmap is supposed to work?

The idea is that instead of populating a devmap entry with just an ifindex, you make the value an instance of struct bpf_devmap_val:

```c
struct bpf_devmap_val {
    __u32 ifindex;   /* device index */
    union {
        int   fd;    /* prog fd on map write */
        __u32 id;    /* prog id on map read */
    } bpf_prog;
};
```

so you put in both an index and an fd pointing to an XDP program (with expected_attach_type of BPF_XDP_DEVMAP). Then, when you call bpf_redirect_map() on ingress, that second devmap program will be executed after (or during) the redirect; so semantically it is tied to the destination ifindex, so you can do things like rewrite MAC addresses based on the egress port, etc.
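A userspace sketch of filling in such a value, using a local copy of the struct quoted above so it compiles without kernel headers (the helper name is hypothetical). As far as I know, devmap programs were added in Linux 5.8, so this would need a newer kernel than the 5.4 one mentioned in the issue.

```c
#include <stdint.h>
#include <string.h>

/* Local copy of struct bpf_devmap_val; the real one is in <linux/bpf.h>
 * on kernels that support devmap programs. */
struct devmap_val {
    uint32_t ifindex;        /* egress device index */
    union {
        int      fd;         /* prog fd on map write */
        uint32_t id;         /* prog id on map read */
    } bpf_prog;
};

/* Build the value for one devmap entry: redirect to `ifindex` and run the
 * XDP program behind `prog_fd` (loaded with expected_attach_type
 * BPF_XDP_DEVMAP) on every frame redirected through this entry. */
static struct devmap_val make_devmap_val(uint32_t ifindex, int prog_fd)
{
    struct devmap_val val;
    memset(&val, 0, sizeof(val));    /* start from an all-zero value */
    val.ifindex = ifindex;
    val.bpf_prog.fd = prog_fd;
    return val;
}
```

With libbpf, the value would then be written with something like `bpf_map_update_elem(map_fd, &key, &val, BPF_ANY)` into a devmap whose value size is 8 bytes, i.e. the full struct rather than just the ifindex.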

>>> I tested the redirects with counters and found that I receive all packets until the interface eno5. After the second redirect from eno5 towards egress they get dropped.
>>
>> Right, figured that would be the most likely place. So apart from the CPU or checksum issues I already mentioned, another possible reason is simply that the hardware is overwhelmed. XDP_REDIRECT bypasses the qdisc layer, so there's no buffering if the hardware can't keep up. So if the traffic generator is bursty I wouldn't be surprised if it could overwhelm the hardware...
>
> I also thought about the fact that the network device cannot keep up with the speed or the copying takes too long. But I tested the same setup without limiting the traffic generator in throughput. This generates around 4Gbit/s of 1500B packets and results in around 1Gbit/s of packets on eno5. So the speed can be achieved, but somewhere in my admittedly confusing setup I lose / drop around 70% of the packets.

Well, it can still be overflowing the hardware buffer if it is bursty. For instance, say the packet generator generates 100 packets back-to-back, then pauses for a little while, then generates another 100 packets, etc. If the hardware packet buffer is only 30 packets, 70 of those 100 packets are still going to get dropped on the floor as they are generated, and then once the hardware has sent those 30 packets, it'll go idle for a little while until the next burst of 100 packets arrives (where 70 more packets will get dropped). Etc.

And if this is the case, when you're removing the rate-limit on the traffic generator, you'll just decrease the interval between the bursts, so the idle interval is shorter, but you still drop most of each burst on the floor. Makes sense?
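The burst argument can be checked with a toy simulation. This is a sketch under simplifying assumptions, not a model of any particular NIC: frames in a burst arrive faster than the wire drains them, there is no qdisc to hold the overflow, and the ring only empties during the gap between bursts. The function name and parameters are hypothetical.

```c
#include <stdint.h>

struct burst_result {
    uint64_t accepted;   /* frames that found a free TX ring slot */
    uint64_t dropped;    /* frames that found the ring full */
};

/* Bursts of `burst_len` frames arrive back-to-back at a TX ring with
 * `ring_slots` entries and no qdisc in front of it, so a frame that finds
 * the ring full is dropped. Between bursts the hardware transmits up to
 * `drain_per_gap` frames; nothing drains while a burst is arriving. */
static struct burst_result simulate_bursts(unsigned bursts, unsigned burst_len,
                                           unsigned ring_slots, unsigned drain_per_gap)
{
    struct burst_result r = {0, 0};
    unsigned used = 0;                       /* ring slots holding unsent frames */

    for (unsigned b = 0; b < bursts; b++) {
        for (unsigned p = 0; p < burst_len; p++) {
            if (used < ring_slots) {
                used++;
                r.accepted++;
            } else {
                r.dropped++;                 /* nowhere to queue it */
            }
        }
        /* Idle gap: the hardware sends what it can. */
        used = used > drain_per_gap ? used - drain_per_gap : 0;
    }
    return r;
}
```

For example, `simulate_bursts(10, 100, 30, 100)` and `simulate_bursts(10, 100, 30, 30)` both accept 300 frames and drop 700, so the 70% loss does not depend on the gap length as long as the gap is long enough to empty the ring. Only when the gap gets too short to empty it (`drain_per_gap = 10`) does the loss grow further, to 880 of 1000.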

>>>> or maybe the packet generator is not generating complete packets (checksum error?).
>>>
>>> Regarding the packet generator part, this would mean the packets would be dropped by the kernel on the receiving machine, right? Because this is not the case.
>>
>> Not necessarily. There could be a check in the driver or hardware. Have you looked at the ethtool counters (ethtool -S)?
>
> So on the receiving machine I do see all packets that are seen by the interface eno5. That includes the counters from ethtool -S.
>
> On the sending machine I count only the correctly redirected packets when using ethtool -S.
>
> I also checked dropwatch again and now it is spitting out:
>
> ```bash
> <num> drops at generic_xdp_tx+f1
> ```
>
> So that seems to make sense but the question now is why? :)

This sounds like it's consistent with what I explained above...

|

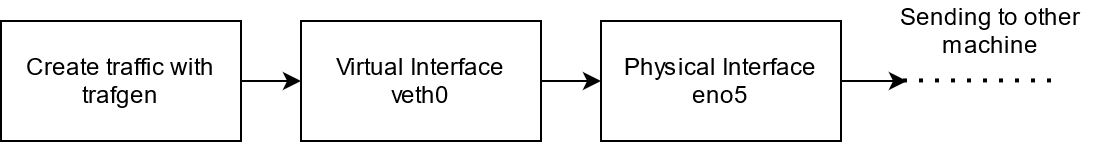

I am currently implementing a program that modifies packets in the egress direction using XDP (in the generic mode because the interfaces do not support driver mode). Therefore I send packets into a virtual interface and redirect these packets towards the ingress direction (using eBPF TC) of the interface I want the packets to modify on (see image below). To then transmit the packets, the XDP program redirects those modified packets back on the same interface in the egress direction. I tested both bpf_map_redirect and bpf_redirect for this second redirect. I know that in my case it is probably easier to use eBPF TC for this modification but I found an issue with this setup. The setup looks like the following:

The first redirect (Step 3) works fine, with the expected performance. But the second redirect at Step 4 (from the interface on which we modified the packets back out of the same interface in the egress direction, using XDP and bpf_redirect_map / bpf_redirect) consistently drops around 70% of the incoming packets: of 1 Gbit/s (1500B packets), only around 300 Mbit/s gets through. Interestingly, the ~70% loss seems to be consistent: when I send 4 Gbit/s of traffic (1500B packets) into the virtual interface, I get 1 Gbit/s on the physical interface in the egress direction (Step 5). Therefore I know that the machine is theoretically capable of redirecting this amount of traffic.
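Working through the reported numbers as a quick sanity check (the helper names are hypothetical, and the packet rates ignore per-frame wire overhead such as preamble and inter-frame gap): 1 Gbit/s in and about 300 Mbit/s out is 70% loss, while 4 Gbit/s in and 1 Gbit/s out is 75%, so the loss fraction is roughly, though not exactly, constant. In packets, 1 Gbit/s of 1500-byte packets is about 83,000 packets per second, so the egress path delivered about 83k packets per second in the 4 Gbit/s run versus roughly 25k in the 1 Gbit/s run, which seems to fit the reading that the drops are not caused by a fixed packet-rate ceiling.

```c
/* Fraction of the offered load that did not come out the other end. */
static double loss_fraction(double offered_mbps, double delivered_mbps)
{
    return 1.0 - delivered_mbps / offered_mbps;
}

/* Packets per second for a bit rate (Mbit/s) and a packet size in bytes,
 * ignoring per-frame wire overhead such as preamble and inter-frame gap. */
static double packets_per_second(double mbps, double packet_bytes)
{
    return mbps * 1e6 / (packet_bytes * 8.0);
}
```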

I could reproduce the issue using only the eBPF TC redirect towards the modifying interface (Step 3) and a minimal XDP program that redirects the packets directly, using both bpf_redirect_map and bpf_redirect.

The eBPF TC program (step 3):

and the XDP program (step 4):

Distro: Ubuntu 20.04 LTS

Kernel: 5.4.0-45-generic

The drivers used for the physical interface:

I already tried multiple things:

-> No drops, expected performance. So the issue seems to be with traffic generated on the sending machine; maybe just a configuration error?

-> Again 70% drops

-> Same drops of 70%

Tracing the packet drops with dropwatch showed me the following (exemplary) result:

"47040 drops at kfree_skb_list+1d (0xffffffffabf1e06d) [software]"

I'm running out of ideas about what to try next, and whether it is my fault or some weird behaviour in XDP. I know that the use of XDP in my case is a little off, but I still want to know why this behaviour appears.
