
BUG: PyPI requires on 1.7.1 should allow Python 3.10+ #14738

Comments


We don't support Python versions that are not officially released yet; that is to say, all bets are off. We do try to check in CI workflows whether things are working, but any other compliance issues have to wait until the version is released. 1.7.1 is indeed not aware of future Python versions.


@ilayn: Thanks, this makes sense.


This does not really make sense IMO. I am not suggesting that scipy should properly "support" beta releases of Python (i.e., fix issues quickly), but wouldn't it make sense to be ready for when, e.g., 3.10 becomes a full release? It generally makes sense for the latest releases to require >= Python 3.x (the version that supports specific language features that release needs), and for older releases to sometimes require < Python 3.y (the version that stopped supporting language features the old release relied on). What I don't understand is the purpose of putting < Python 3.10 on a current package, so that pip automatically installs a lower version from PyPI that is even less likely to support the language features of the new beta.


With Python 3.10 released today, I generally agree with @Sophist-UK on why and how this should be fixed: the current process isn't sustainable for new Python releases unless scipy updates in lockstep with the latest supported Python.

So you'd expect scipy to release a new version immediately alongside every new Python version? That's simply not realistic.

@ev-br: Agreed! This bug pertains to pip automatically installing scipy 1.6.1 from PyPI on all Python releases >=3.10. To reproduce:

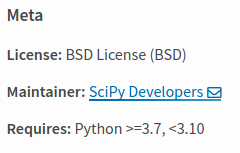

The old scipy 1.6.1 will be installed, not the latest version! On PyPI (https://pypi.org/project/scipy/), scipy 1.7.1 requires Python <3.10, and the most recent release with an unrestricted Python >=3.7 requirement was scipy 1.6.1. The recommended fix would be to adjust scipy 1.7.1's metadata to allow Python >=3.7. Not capping the latest version of a package at the latest Python (even beta versions) is what most packages on PyPI do.
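The selection described above can be sketched as follows. This is a simplified, hypothetical stand-in for what an installer does, not pip's actual resolver: `matches` handles only `>=` and `<` clauses (real tools parse Requires-Python with `packaging.specifiers.SpecifierSet`), and the release table holds only the two Requires-Python values mentioned in this thread.

```python
# Simplified sketch: pick the newest release whose Requires-Python accepts
# the running interpreter. Not pip's real resolver; only ">=" and "<"
# clauses are understood here.

def parse_version(text):
    """Turn '3.10' into (3, 10, 0) so versions compare as integer tuples."""
    parts = tuple(int(part) for part in text.split("."))
    return parts + (0,) * (3 - len(parts))


def matches(requires_python, python_version):
    """Return True if python_version (a tuple) satisfies every clause."""
    for clause in requires_python.split(","):
        clause = clause.strip()
        if clause.startswith(">="):
            if python_version < parse_version(clause[2:]):
                return False
        elif clause.startswith("<") and not clause.startswith("<="):
            if python_version >= parse_version(clause[1:]):
                return False
        else:
            raise ValueError(f"unsupported clause in this sketch: {clause!r}")
    return True


# Requires-Python values as described in this thread (1.7.1 capped, 1.6.1 not).
RELEASES = [("1.7.1", ">=3.7,<3.10"), ("1.6.1", ">=3.7")]


def pick_release(releases, python_version):
    """Scan newest to oldest and return the first release that matches."""
    for version, requires_python in sorted(
        releases, key=lambda release: parse_version(release[0]), reverse=True
    ):
        if matches(requires_python, python_version):
            return version
    return None


print(pick_release(RELEASES, (3, 10, 0)))  # 1.6.1: the cap skips 1.7.1
print(pick_release(RELEASES, (3, 9, 7)))   # 1.7.1
```

The cap on 1.7.1 does not produce an error on 3.10; it just makes the scan fall through to 1.6.1, which is the broken install reported in this issue.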


@rgommers is probably the expert on setting the max version numbers in …


@zachetienne I seem to recall some discussion that this was a pip problem. EDIT: Wrong workaround.


We will release 1.7.2 with Python 3.10 support very soon. The other part of this is that it would be great to eventually prevent older SciPy versions from being picked up. This is a little tricky to achieve, but it can be done by adding a new 1.6.x release that immediately aborts a from-source build with a clear error message. That's something I may get around to at some point, but it needs thorough testing to ensure we're not breaking anything.

This is done now. I'll close this issue and open a separate one for the related topic of preventing older SciPy versions from being found for new Python versions.


Sorry if this is in the discussion somewhere, but why is it absolutely necessary to upper cap the …

It has the drawback of causing …

It also forces everyone who depends on …

Specifying an upper cap in the setup.py also goes against the goals for what that file is doing. This is the distinction between "abstract" and "concrete" requirements, right? What would be the downside of not capping it? Do we really expect that every new Python is just going to break …

I ask because I am running into a problem similar to what @NeilGirdhar describes, where a solver complains because my …

I am definitely not a packaging expert, but to me this post from @henryiii makes a pretty compelling case that libraries should not place upper caps on dependencies, and on Python in particular: …

See also this discussion, in particular the points Paul Ganssle makes: …

I realize …


I found some previous discussion on this release PR: (Maybe I should have looked for that before.) There @rgommers says:

Why does a new Python require a new SciPy release? I think in that same discussion @pganssle makes a case against upper caps here: …

Isn't the last thing we want to force domain scientists to think about the complexities of constraints and solvers? And it's not like semver is actually a guarantee of anything. Doesn't the case against upper-capping apply doubly to Python itself? As @pganssle said in that discuss.python.org post about placing any kind of upper bound on Python:

Do we really know that any new Python release will cause breaking changes for …

Sorry for the verbose posts, but I am a bit frustrated with running into this on multiple projects.

Because (a) it is very likely not to work out of the box, and (b) the vast majority of users are unable to build from source, and we get a lot of bug reports about building from source. It's a very bad idea for libraries as complex as SciPy to have an sdist but no matching wheels for a new Python version; it's much better to give an immediate, clear error message.

If it's niche packages that release too slowly, then yes, they should not use an upper bound. If it's libraries like SciPy, scikit-xxx, PyTorch, TensorFlow or whatever, then you need to adjust your expectations about everything being available shortly after a new Python release. Python's ABI stability window is simply too short to allow preparing binaries in time.


The problem @NickleDave is complaining about is not the fact that SciPy doesn't work on 3.10, but rather that SciPy is setting …

The good news is that this bad solve only happens on Python 3.10, where even a good solve would likely not work (assuming SciPy really doesn't support Python 3.10).

This has another unpleasant effect. If you use a locking package manager (Poetry, PDM), the package manager will see that the lock file has a Python cap in it. It will then force the user to add the matching cap, since the lock file would be invalid on Python 3.10. If you are developing a library and don't have a strong cap on SciPy, this is wrong, but it doesn't matter: these systems prioritize the lock file over the library dependencies. The bad news is that this affects all downstream libraries using a locking package manager, on any Python version.

IMO, a library's Requires-Python should not depend on the lock file. If a system wants to force you to be truthful for the lock file's sake, there should be a separate mechanism for that which does not get exported. Or it could just print a warning ("this lock file will only be valid on Python x to y"). But neither @frostming's PDM nor Poetry supports this, as far as I know. Until this is fixed, the only advice I can give is to avoid locking package managers when developing libraries.


If you really are more worried about building from source than about actual incompatibilities (as long as you don't see warnings, the next Python release is supposed to work, unless you depend on private details like bytecode, as Numba does), then the correct solution would be to ask users to include a pip flag or environment variable, like …
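One way to read the opt-in idea above is a guard at the top of `setup.py` that refuses to build on untested Pythons unless the user explicitly asks for it. This is a hypothetical sketch: the variable name `SCIPY_ALLOW_UNSUPPORTED_PYTHON` and the `(3, 10)` bound are illustrative assumptions, not anything SciPy or pip defines.

```python
import os
import sys

# Hypothetical opt-in switch and bound; neither is defined by SciPy or pip.
OPT_IN_VAR = "SCIPY_ALLOW_UNSUPPORTED_PYTHON"
FIRST_UNSUPPORTED = (3, 10)


def check_python(version_info=None, environ=None):
    """Raise a clear error on untested Pythons unless the user opted in.

    Defaults to the running interpreter and the real environment; both can
    be passed explicitly so the logic is easy to exercise.
    """
    version_info = sys.version_info if version_info is None else version_info
    environ = os.environ if environ is None else environ
    major, minor = tuple(version_info[:2])
    if (major, minor) < FIRST_UNSUPPORTED:
        return None  # tested Python: build normally
    if environ.get(OPT_IN_VAR) == "1":
        return None  # advanced user explicitly accepted an untested build
    raise RuntimeError(
        f"This release has not been tested on Python {major}.{minor}. "
        f"Set {OPT_IN_VAR}=1 to attempt a from-source build anyway."
    )
```

In a real `setup.py` this would be called once, before `setup()`, so the failure happens before any compilation starts.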


Maybe we should start with why we are doing this instead of what we are doing. What we have been struggling with so far is that new Python versions, without any exception, have broken something small or big; every single major version, mind you. Hence, for us, it is not a matter of probability anymore but a certainty that something changes with every Python release: how DLLs are looked up on Windows, some Linux detail, Apple deciding to release an architecture with zero documentation or compilation guidelines, ABI changes, etc.

For that reason, we don't want current versions to be installed on new Pythons they were never meant to run on. We are 100% sure that something will break, and we will have users opening bug reports, with issues coming in for the next 18 months, overlapping with yet another release in the meantime. That is the most annoying part, since we don't even know which version is more broken, so we can't prioritize the fixes. I wish it weren't like that, but it is what it is. Obviously, this causes a large influx of bug reports and takes a good amount of maintainer time just to find the problem, let alone fix it.

So what we are continuously doing is limiting the scope of each SciPy version to certain Python versions, certain architectures (ARM, Win 32-bit, obscure stuff) and certain third-party binaries. We are trying to be as flexible as we can, but maintainer time is VERY precious and not getting cheaper anytime soon. Thus we are, probably wrongly, using certain tools to achieve this. Maybe there are better ways to go about this; I don't know. But …

So, given this very brief explanation of our issue, I'd like to ask the people in this thread for help: what might a good solution be, instead of discussing one particular choice we made? Without an alternative, we cannot stop doing this, for the reasons summarized above. Asking users to do anything different than …


I don't know what the technical solution might be, but as a user of SciPy I know what I want to happen: I want to get the latest version of SciPy that works on my version of Python. If there is no version of SciPy verified for my version of Python (i.e., because it is too new, a beta, or too old), I want to be told that I need to use a different version of Python. What I don't want is for pip to install an earlier version of SciPy that is equally invalid for the version of Python I am running because (as actually happened) the earlier version was NOT limited to the Python versions it would install against. (In other words, to some extent this is an issue with older versions NOT being constrained, rather than with the current version being constrained.)

Yes, this is what I mentioned: you'd have to "yank" every older release of SciPy to get this to "work". But even then, this is still ridiculous: pip will continue to check all older versions before giving up and saying there's no matching version. It also has the side effect of causing locking package managers to force a downstream library to cap, even if SciPy eventually releases a Python 3.10 compatible version. If you remove the cap and instead just add the following to the setup.py:

    import sys

    if sys.version_info >= (3, 10):
        raise RuntimeError("Only Python versions >=3.8,<3.10 are supported.")

Now you don't get a back-track through all SciPy versions, and you have a customizable error message.

What would you expect it to be called? It's not the "min python version", since when it was introduced people were expected to set it to things like …

I am not sure that this is the answer either, as I think it will still allow PyPI to offer an incompatible version and then give an error message. I think the answer may be to revisit the metadata of all previous versions on PyPI so that it only offers versions of SciPy that match the version of Python pip is running under.

I like this solution a lot. This should be done not just for Python versions, but also for all dependencies. Even when projects use semantic versioning, patch versions (of project A) sometimes break other projects (B). It would be nice for those projects B to be able to retroactively mark themselves as incompatible with the new patch version of project A. New versions of project B that are compatible can go back to being constrained only by major version.

Because there is no facility for retroactively updating dependencies, in the above scenario not only is B broken, but users of B are broken, and they often have to constrain project A until B releases a new version, at which point they can un-constrain.

However, this solution doesn't fix the problem with locking package managers. As soon as a new version of Python comes out, scipy has to mark itself as incompatible with it. At that point, the locking package managers will (using current behavior) not support any version of scipy on a project that supports the new Python. I think this behavior of locking package managers needs to be changed as well. Your project saying that it requires, say, 3.7–3.10 should not mean that every dependency has to. It just means that you're only as constrained as your dependencies. Poetry should resolve a lock file for the Python version of the environment only.


PyPI metadata is immutable.

Is it not possible for that to be changed? |

I don't know exactly what it is supposed to be used for (apparently not for what we used it for), but if you call it Requires-Python, I expect it to constrain the Python versions the package can be used with and to error out if the running Python doesn't match. Instead, pip fires up a time-travelling search through the tree of previous versions. This, to my narrow understanding, is overengineering to solve a problem I am not sure exists.


Please read my post. Users are not broken if they need to add constraints. They might be irritated and noisy, but they are not broken. They are broken if they can't solve due to over-constraints. And if they are using an old version, it might be because someone else capped SciPy.

There have been some ideas about allowing metadata to be updated afterwards, but it's not trivial and breaks security through hashing. You could have a metadata file that "goes along" with the wheel, but that complicates things. The "solution" you are proposing today would be to yank all previous releases and then release new patch or post versions of every minor SciPy release, with new binaries. A better solution is to stop misusing Requires-Python, and occasionally release new patch releases of old versions if a really bad dependency failure starts happening.


I am not sure if that is directed at me, but I can't understand the rationale for digging through the history when the latest version does not support Python 3.10, in the hope that earlier versions might support it. I have no examples of this in any package I have dealt with so far. That is why we used Requires-Python: it didn't occur to us that this would be the case.

One problem with your solution is that if X depends on scipy<1.6, and X and scipy are both unconstrained on Python version, then pip will happily try to install them on new Python versions. It shouldn't be X's maintainer's job to figure out that scipy is incompatible with that new version. You bring up a lot of good points about the complications involved in updating the metadata, but really that is the ideal world solution. |


Requires-Python is a free-form string that gets parsed into a packaging SpecifierSet. It has no understanding of "maximum" or "minimum". It can only answer one question: does a version of Python (including the patch version!) match it? It returns true if it does. So if it "fails", pip doesn't know why, and assumes that it needs to keep looking for a release that passes.

It was developed for IPython, as they were the first to want to drop Python 2 support from their main releases and keep just an LTS release for Python 2. It's now a key component of NEP 29: the fact that the latest libraries don't have new releases for Python X doesn't stop Python X from being used. This obviously doesn't support upper caps properly. That was never the intention of the metadata; it is supposed to help fix a broken solve, not break a solve. That's why there is no requires-arch, etc. Which, by the way, covers about half the complaints above: Apple released Apple Silicon on the existing 3.8, so a cap would not have fixed that. Use setup.py to throw an error if you want to intentionally break something with a nice error.

And, yes, updating metadata would be nice from a package's standpoint. It's just a mess from all other standpoints. :) That doesn't mean it's impossible someday AFAIK; it just needs someone willing to work it out.
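The point that Requires-Python only answers yes or no can be illustrated with a small stand-in matcher. This is a simplified sketch, not `packaging.specifiers.SpecifierSet`: it supports only comma-separated `>=`, `<=`, `==`, `!=`, `>` and `<` clauses on plain numeric versions, with no wildcards, `~=`, or pre-releases.

```python
import operator

# Two-character operators are listed first so ">=" is not read as ">".
_OPS = [
    (">=", operator.ge),
    ("<=", operator.le),
    ("==", operator.eq),
    ("!=", operator.ne),
    (">", operator.gt),
    ("<", operator.lt),
]


def _version(text):
    """'3.10' -> (3, 10, 0): pad to three parts so short forms compare equal."""
    parts = tuple(int(part) for part in text.strip().split("."))
    return parts + (0,) * (3 - len(parts))


def matches(requires_python, python_version):
    """Answer the only question Requires-Python can answer: yes or no."""
    version = _version(python_version)
    for clause in requires_python.split(","):
        clause = clause.strip()
        for symbol, compare in _OPS:
            if clause.startswith(symbol):
                if not compare(version, _version(clause[len(symbol):])):
                    return False
                break
        else:
            raise ValueError(f"unsupported clause in this sketch: {clause!r}")
    return True


spec = ">=3.7,<3.10"
print(matches(spec, "3.9.13"))  # True
print(matches(spec, "3.6.15"))  # False (too old)
print(matches(spec, "3.10.0"))  # False (too new): same answer, no reason given
```

The too-old and the too-new interpreter get the same bare `False`; nothing in the result tells an installer that 3.10 failed an upper bound rather than a lower one, so it keeps scanning older releases.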

I understand that "older version now gets found" is a problem. The solution is gh-14986, which I hope to revisit during the Christmas holidays. It is basically what you are suggesting, @henryiii, except that I think we have better metadata, and I hope pip's behavior can improve over time. I don't think downloading an sdist and then erroring out is ideal, but that's all we've got, because metadata on PyPI is unfortunately immutable indeed.

I disagree that this is misusage. It adds the metadata we want to add. Raising an exception inside an sdist is an unfortunate hack that, yes, we'll have to do somewhere to stop old versions from being found. The Poetry/PDM behavior is a usability issue on their side. As for the whole "adding an upper bound is wrong" argument: no need to repeat it. The bounds are desired for us.

By the way, this kind of thing is the reason that other projects with complex builds don't upload an sdist at all (e.g., TensorFlow, PyTorch). Unless PyPI/pip usability improves (most importantly, allowing us to default to …

@henryiii I know you understand this stuff well; I'd appreciate your comment on gh-14986 as the solution.


Making all metadata immutable is crazily stupid, and this is an example of just why. Yes, for security reasons most of the metadata should be immutable, except metadata relating to co-dependencies, because you never know when co-dependency constraints are going to need to change. I haven't fully thought it through, but it might be possible to only allow increased constraints on existing releases, rather than removal of constraints.


I think it's better to have that discussion on the Python repo, forum, or mailing list. Here it won't solve anything, though I am interested to hear the pros and cons.


@rgommers everything you just said totally makes sense, and yes, after @henryiii explained it more clearly, I agree that it's mainly a usability issue with Poetry, PDM, et al. Likewise @ilayn, I understand much better now that new Python releases do quite literally break …

Definitely sounds like #14986 is a short-term fix that addresses the core issue here.

This is tied to how the entire system works. Every file, wheel or SDist, is standalone and contains its own metadata. Files are hashed; you can never upload two different wheels with the same name but different hashes. If you use pip-tools' compile, you'll get a requirements.txt that looks like https://github.com/pypa/manylinux/blob/main/docker/build_scripts/requirements3.10.txt, where the hashes are listed for each file. You can't change the files; that's a core security requirement.

So the only way to modify the metadata would be to add a new file that sits "alongside" the wheel or SDist file (probably one per file, since each file technically could have different metadata), and then modify setuptools, flit, and every other build system to produce them; pip and every other installer to read them; PyPI and every other wheelhouse to include them; pip-tools, Poetry, Pipenv, PDM, and every other locking system to handle them somehow; etc. And the incoming PEP 665. And any older version of anything (like pip) would not respect the new metadata. And you'd have to be very careful to only allow a very limited subset of changes: you wouldn't want an update of a package to add a new malicious dependency via metadata that you could not avoid via hashes! Would you be able to get old versions of dependency override files too? Plus other questions would need to be answered.

It could be done, but it would be a major undertaking, for a rather small benefit. Ideally, everyone should try to avoid capping things, play nicely with deprecation periods, test alpha/beta/rc releases, and just understand that in the real world, a library can't perfectly specify its dependencies so that there will never be a breakage. That's only possible when locking, for applications. You should at least consider the scope of the problem and the other aspects before you call something "crazy stupid". In fact, do other non-Python systems allow changing metadata? I think many of them do not.

And it's really easy to "fix" by releasing a new patch release with more limited metadata; this just is not ideal for large binary releases, but is fine for pure Python / smaller packages. If someone carefully researches this, finds a core-dev sponsor to work with (I can suggest a few), and is willing to push this through, that's what it would take to make it happen. The first step would be to discuss it on the Python forums.
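The hash argument can be made concrete with `hashlib`. In this sketch the byte strings stand in for a distribution file (a real wheel is a zip archive with METADATA inside; the package name `example` is hypothetical), and the pin mimics the `sha256:<hex>` form used by pip's `--hash` option. Editing even one metadata line changes the digest, so every existing pin stops matching.

```python
import hashlib


def pinned_hash(data: bytes) -> str:
    """Return a pin in the 'sha256:<hex digest>' form used by --hash."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def verify(data: bytes, pinned: str) -> bool:
    """Check downloaded bytes against a pinned '<algorithm>:<hex>' value."""
    algorithm, _, expected = pinned.partition(":")
    return hashlib.new(algorithm, data).hexdigest() == expected


# Stand-in for the bytes of a published file (hypothetical package).
original = b"Name: example\nVersion: 1.0.0\nRequires-Python: >=3.7,<3.10\n"
pin = pinned_hash(original)  # what a lock file / requirements.txt records

# "Fixing" the metadata in place produces different bytes...
edited = original.replace(b",<3.10", b"")

print(verify(original, pin))  # True
print(verify(edited, pin))    # False: the edit breaks every existing pin
```

This is why any mutable metadata would have to live in a separate file next to the distribution, with all the tooling changes listed above.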

Currently, Requires-Python is not for upper limits. Trove classifiers are for indicating whether something was tested on a newer version of Python, not Requires-Python. Requires-Python is only for dropping Python versions you previously supported. This is why you are having to come up with workarounds to fix the problems created by adding it in the first place.

I agree here. Though it's a major user base and I think you should at least think about users being affected by it, rather than just saying it is their fault.

I described a way to add an upper bound in setup.py; I was not saying you should not have one. You can add metadata via classifiers. There's no need to misuse Requires-Python for this. Your workaround still requires pip to "scroll back" and try to install an old version; you are just breaking that old version. Your workaround is not a horrible one, and it's only marginally worse than the "ideal" way to do it. It's better than numba, which scrolls back to version 0.51 and then breaks because llvm-config is not present to build llvmlite, never making it to the failure message.

I do have an alternate idea for a workaround. You can upload empty binary packages for the next version of Python once the RC comes out. These empty binary packages have a single dependency on "python310-unsupported" or something like that. Remember that I mentioned that dependencies are per-file, not per-release (please tell Poetry that). That has several benefits: you can upload them to an existing release; you can (and must) wait until Python 3.x is in the RC phase, so you can see whether it just happens to work before uploading them; it gives the correct result even if some of your dependencies won't build (since they are not included); and it still allows advanced users like packagers to download the source and build it if they are testing it out. I've seen this idea floated for the manylinux1 removal coming in two weeks.

Now, there is another possibility: we could change the meaning of Requires-Python to also handle upper bounds. I don't know how much traction that would gain. Requires-Python was added (originally at the request of the data science community) to assist with dropping older versions of Python. Making it harder to update to a new version of Python might not go over all that well. But there's enough incorrect usage already that maybe just handling it by ignoring upper caps would be fine (fine by me, anyway).
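The placeholder-wheel workaround described above could be sketched like this. Only the core idea comes from the comment; the helper names are hypothetical, and the blocker name `python310-unsupported` is assumed to be a project that does not exist on the index. The intent is that selecting the placeholder on that Python fails on the missing blocker instead of producing a broken install; how a given resolver backtracks after that failure is not modeled here.

```python
# Hypothetical sketch of the "placeholder wheel" idea: an otherwise empty
# wheel for an untested Python whose only dependency is a blocker project.

def blocker_name(python_tag):
    """Map a CPython tag such as 'cp310' to 'python310-unsupported'."""
    if not python_tag.startswith("cp"):
        raise ValueError(f"expected a CPython tag like 'cp310', got {python_tag!r}")
    return f"python{python_tag[2:]}-unsupported"


def placeholder_metadata(name, version, python_tag):
    """Core metadata for the placeholder: no code, one blocking dependency."""
    return "\n".join(
        [
            "Metadata-Version: 2.1",
            f"Name: {name}",
            f"Version: {version}",
            f"Requires-Dist: {blocker_name(python_tag)}",
        ]
    ) + "\n"


def placeholder_filename(name, version, python_tag, platform_tag):
    """Wheel name: {name}-{version}-{python tag}-{abi tag}-{platform tag}.whl."""
    return f"{name}-{version}-{python_tag}-{python_tag}-{platform_tag}.whl"


print(placeholder_filename("scipy", "1.7.1", "cp310", "win_amd64"))
print(placeholder_metadata("scipy", "1.7.1", "cp310"))
```

A real wheel also needs `WHEEL` and `RECORD` files in its `.dist-info` directory, which this sketch leaves out. Because metadata is per file, such a wheel can be added to an existing release without touching the wheels for supported Pythons.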
Anyway, the "steps" would be to add a way to compute range overlaps with SpecifierSets (I already want this for something else, actually, but it's not trivial), then to add or suggest special handling for installs when the current version and all future versions are excluded by Requires-Python: either the cap could be ignored, or a nice message could be generated. This would "remove" the ability to drop a newer version of Python, but that's not really useful to the best of my knowledge. Or the meaning of the field could be very specifically clarified to refer only to dropping previously supported Pythons, and setuptools, flit, etc. could start producing warnings if anyone adds an upper cap. I'd be fine with that too. ;)
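The special handling proposed above (stop with a clear message when the running Python and every later one are excluded) might look roughly like this. It is a sketch of the proposal, not current pip behavior, and it understands only `>=`, `<` and `<=` clauses. The key observation is that failing an upper-bound clause means every newer Python fails it too, whereas failing a lower bound can still be fixed by an older release.

```python
def _version(text):
    """'3.10' -> (3, 10, 0); pads so '3.10' and '3.10.0' compare equal."""
    parts = tuple(int(part) for part in text.split("."))
    return parts + (0,) * (3 - len(parts))


def classify(requires_python, python_version):
    """Return 'install', 'backtrack' or 'stop' for one release.

    'stop' means an upper cap excludes this Python and, by construction,
    every newer one, so the proposal is to report that instead of
    scanning older releases.
    """
    version = _version(python_version)
    for clause in (c.strip() for c in requires_python.split(",")):
        if clause.startswith("<="):
            if version > _version(clause[2:]):
                return "stop"
        elif clause.startswith("<"):
            if version >= _version(clause[1:]):
                return "stop"
        elif clause.startswith(">="):
            if version < _version(clause[2:]):
                return "backtrack"  # an older release may still support it
        else:
            raise ValueError(f"unsupported clause in this sketch: {clause!r}")
    return "install"


for python in ("3.9.7", "3.10.0", "3.6.15"):
    print(python, classify(">=3.7,<3.10", python))
```

An installer following this idea would turn `"stop"` into an error naming the offending cap, rather than silently falling back to an older, uncapped release.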


@henryiii The PEP and the PyPA Core Metadata specification both say:

The core spec also gives …


I'm going to bring this up and see if the situation can be improved. I think there are three options that would all be better than the current implementation. For two of them, I think the wording needs to be tweaked too, as it's terrible. I'll try to link the discussion here once I start it (in 1-2 days).


I've started a discussion here: https://discuss.python.org/t/requires-python-upper-limits/12663


Thanks @henryiii, much appreciated.

@rgommers Your workaround does not work with locking package managers. I've tested both Poetry and PDM. If you add a basic file like this:

    [project]
    name = ""
    version = ""
    dependencies = [
        "numba",
    ]
    requires-python = ">=3.8"

    [build-system]
    requires = ["pdm-pep517"]
    build-backend = "pdm.pep517.api"

(Or the matching non-PEP 621 version for Poetry; I'm using numba since SciPy now supports 3.10), then PDM/Poetry will back-solve to the last version that "supports" all future versions of Python (the last one without an upper limit, 0.51) and lock that. Which of course breaks, even if you are currently running on Python 3.9! Either every single version ever released without a cap has to be yanked, or you could use the fake wheel suggestion I made above. But this hacky solution will not work properly; the metadata still lies. I'd recommend waiting (or removing the upper limit) until https://discuss.python.org/t/requires-python-upper-limits/12663 has some sort of resolution.
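The back-solving behavior described above can be modeled in a few lines. The assumption, taken from this comment, is that these lockers only accept a dependency release whose Requires-Python covers the project's whole declared range (`>=3.8`, open-ended), so any upper cap disqualifies it. The release table is hypothetical (versions `1.0.0` to `3.0.0` are made up), and only `>=` and `<` clauses are understood. For contrast, `env_choice` resolves for a single running Python, which is the behavior suggested earlier in the thread.

```python
def _version(text):
    """'3.8' -> (3, 8, 0) so versions compare as integer tuples."""
    parts = tuple(int(part) for part in text.split("."))
    return parts + (0,) * (3 - len(parts))


def _clauses(spec):
    """Yield (operator, bound) pairs; only '>=' and '<' are supported."""
    for clause in (c.strip() for c in spec.split(",")):
        if clause.startswith(">="):
            yield ">=", _version(clause[2:])
        elif clause.startswith("<") and not clause.startswith("<="):
            yield "<", _version(clause[1:])
        else:
            raise ValueError(f"unsupported clause in this sketch: {clause!r}")


def covers_open_range(spec, project_min):
    """True if spec allows every Python >= project_min (no upper cap at all)."""
    for op, bound in _clauses(spec):
        if op == "<":
            return False  # any cap excludes part of an open-ended range
        if bound > _version(project_min):
            return False  # excludes the low end of the project's range
    return True


def matches(spec, python_version):
    """True if one concrete Python version satisfies spec."""
    version = _version(python_version)
    return all(
        version >= bound if op == ">=" else version < bound
        for op, bound in _clauses(spec)
    )


# Hypothetical dependency releases and their Requires-Python values.
RELEASES = [("3.0.0", ">=3.8,<3.11"), ("2.0.0", ">=3.7,<3.10"), ("1.0.0", ">=3.6")]


def _newest(releases, accept):
    for version, spec in sorted(releases, key=lambda r: _version(r[0]), reverse=True):
        if accept(spec):
            return version
    return None


def lock_choice(releases, project_min):
    return _newest(releases, lambda spec: covers_open_range(spec, project_min))


def env_choice(releases, python_version):
    return _newest(releases, lambda spec: matches(spec, python_version))


print(lock_choice(RELEASES, "3.8"))   # oldest, uncapped release
print(env_choice(RELEASES, "3.9.7"))  # newest release that works on 3.9.7
```

With the open-ended range, the locker settles on `1.0.0` even when you are running 3.9.7, where `3.0.0` would work, which matches the numba 0.51 outcome described above.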


Thanks for trying that @henryiii!

This is, unfortunately, incorrect. pip will still pick up yanked sdists for old releases if it cannot find another match. I recently had to hard-delete very old (<= 0.3.1) PyTorch sdists from PyPI because they kept being reported as not working by users despite being yanked. The relevant PEP (I forget which one) says yanked versions MAY be removed, not MUST. I think that was a mistake; it makes zero sense to try to install yanked old releases for a simple …


That's the difference between yanking and deleting: yanking tries to avoid selecting the package, but will still select it if otherwise there would be no solution. I would guess that in your case someone had a pin or upper cap on an old version of PyTorch (probably from some other dependency, so it was not obvious), making the yanked PyTorch SDists the only valid solve. (At least that's the way it's supposed to work; I wouldn't promise there are no bugs.) "automatically selected if not specified": so yes, this was simplified. In other words, if the final solve is 0.3.*, and all 0.3.x releases are yanked, then the latest 0.3.x release will be selected anyway. But a non-yanked release will be preferred over a yanked release if possible.
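The yanking rule as described here (prefer non-yanked releases, but fall back to yanked ones when nothing else satisfies the request) can be sketched as follows. This follows the thread's description rather than any installer's code; PEP 592 leaves installers some latitude, for example to refuse yanked releases outright. The version numbers are illustrative only.

```python
def _version(text):
    return tuple(int(part) for part in text.split("."))


def select(candidates, allowed):
    """Pick a release from (version, yanked) pairs.

    allowed is a predicate on the version string (standing in for the
    constraints the resolver has accumulated). Non-yanked releases win;
    yanked ones are used only if nothing else satisfies the constraints.
    """
    matching = [(version, yanked) for version, yanked in candidates if allowed(version)]
    if not matching:
        return None
    not_yanked = [version for version, yanked in matching if not yanked]
    pool = not_yanked or [version for version, _ in matching]
    return max(pool, key=_version)


# Illustrative history: two old yanked releases and one current release.
CANDIDATES = [("0.3.0", True), ("0.3.1", True), ("1.10.0", False)]

print(select(CANDIDATES, lambda v: True))                  # unconstrained
print(select(CANDIDATES, lambda v: v.startswith("0.3.")))  # only 0.3.* allowed
```

The second call is the situation described for PyTorch: once something narrows the choice to 0.3.* (or only those releases have a usable sdist for the platform), the yanked release is selected anyway, which is why hard-deleting was needed to stop it.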


Those were the only releases with sdists, so a simple …


Ahh, yes. So if you are asking for an SDist-only release, or if you are on a platform with no binaries at all, those would be selected. Without the SDists, they would get a broken solve instead. Yanking does not break a solve; that's the idea.

Describe your issue.

With Python 3.10rc2 installed, `pip install scipy` installs scipy 1.6.1 (the most recent version on PyPI that does not restrict Python <3.10) and not 1.7.1, resulting in a rather broken install. I get a lot of `Undefined symbol: _PyGen_Send` errors when running ordinary code. It looks like on PyPI scipy 1.7.1 requires Python < 3.10.

Installing master with `pip install git+https://github.com/scipy/scipy` fixes the problems.

Reproducing Code Example

Error message

SciPy/NumPy/Python version information

3.6.1
