
NERSC Perlmutter multiple-node run issue #112


|

That strange feature looked like a lightcone, so that's probably a place to start checking. Doing a regression analysis to find which PR introduced this error could also help.

Could you also quickly check whether the number of particles is correct? Could it be that you are reading from the wrong catalogue or particle type? Do the two runs have the same number of particles?

Another thing to try is running with 128 ranks across 2 nodes, with 64 ranks per node. Does that give the same strange output?

Yu

…On Thu, Jan 26, 2023 at 5:50 PM Biwei Dai ***@***.***> wrote:

Hi,

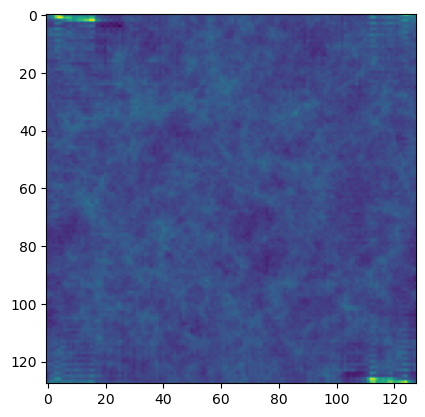

I ran FastPM with two nodes (256 MPI ranks) on NERSC Perlmutter, but got the following results at z=0:

[image: fastpm_2node]

<https://user-images.githubusercontent.com/21130167/214989492-34ecf9b8-8b14-4a42-adb1-9d3278729d95.png>

I got this strange structure at the top left and bottom right. We can also see the grid that corresponds to the different ranks?

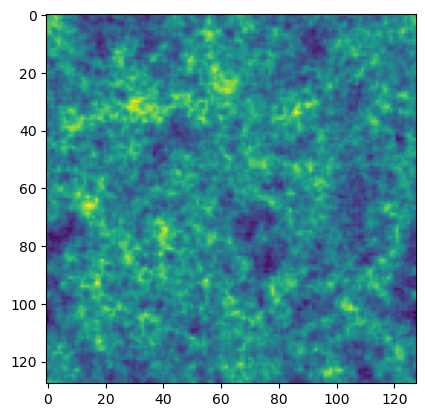

This only happens with multiple nodes. It works well with a single node (128 MPI ranks) on Perlmutter:

[image: fastpm_1node]

<https://user-images.githubusercontent.com/21130167/214989722-28be21ee-473f-4779-b011-555228730941.png>

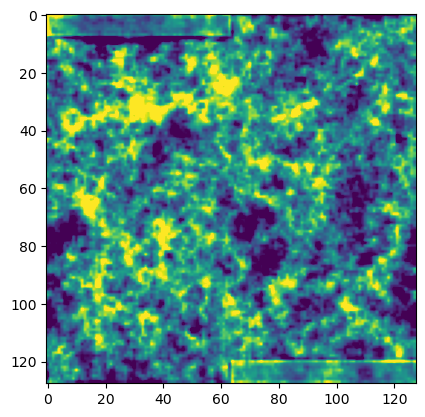

This is the linear density field generated with two nodes, which looks fine to me:

[image: lineardensity_2node]

<https://user-images.githubusercontent.com/21130167/214991211-2da69d01-bff0-44e4-8af3-69dbe81a6338.png>

The IC is generated at z=9 with 2LPT. This is the snapshot at z=9:

[image: z9_2node]

<https://user-images.githubusercontent.com/21130167/214991547-439b2e48-77cc-4544-a20e-93a1259bb991.png>

The code is compiled with Makefile.local.example. I am not sure whether I compiled it correctly (there are some warnings but no errors during compilation).


|

|

Then I think we have ruled out a simple error like reading in some lightcone data.

If the linear density looked right, then the problem is likely in the all-to-all exchange of particles, e.g. if we receive zeros or junk there. Some MPI implementations have had that sort of bug before. It could also be a bug in the snapshot IO, where we sort the particles.

If you compare two runs with the same number of particles and the same number of processes, but using 1 vs. 2 nodes, can you identify a pattern?
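A minimal sketch of that comparison, assuming both snapshots have been read into flat arrays of (x, y, z) positions sorted by particle ID, so that index i refers to the same particle in both runs. The helper name `fraction_identical` and the tolerance `tol` are illustrative assumptions, not part of FastPM. The check is written as `!(diff <= tol)` so that NaNs coming from corrupted data count as mismatches.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper (not part of FastPM): fraction of particles whose
 * positions agree between two runs. Both arrays hold n particles stored as
 * consecutive (x, y, z) triples, already sorted by the same particle ID. */
double fraction_identical(const double *pos_a, const double *pos_b,
                          size_t n, double tol)
{
    assert(n > 0 && tol >= 0.0);
    size_t same = 0;
    for (size_t i = 0; i < n; i++) {
        int match = 1;
        for (size_t d = 0; d < 3; d++) {
            double diff = pos_a[3 * i + d] - pos_b[3 * i + d];
            if (diff < 0.0)
                diff = -diff;
            if (!(diff <= tol)) {   /* also true when diff is NaN */
                match = 0;
                break;
            }
        }
        same += (size_t)match;
    }
    return (double)same / (double)n;
}
```

Plotting where the matching particles sit then shows whether the agreement follows rank boundaries rather than being spread uniformly.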

Does the problem also show up if you only run the 2LPT or ZA step? If so, then let's try to find some patterns in its output.

How many threads per process did you use? Let's fix that to one to rule out bugs in the OpenMP library and compiler.

On that note, did you compile with gcc?

Yu

…On Thu, Jan 26, 2023 at 8:48 PM Biwei Dai ***@***.***> wrote:

Thanks for the fast reply!

I didn't enable the lightcone outputs. Does the code still compute lightcones?

Could you explain what regression analysis and PR mean?

The number of particles is correct. I used the same script to run FastPM and read the files. The only difference is the number of nodes and MPI tasks. With one node, I tried lots of different particle numbers and MPI task counts, and they all work well. With multiple nodes, I also tried several different particle numbers and MPI task counts, and they all fail in a similar way.

Here is another run with 1024^3 particles and 512 MPI tasks (4 nodes):

[image: fastpm_n1024_4node]

<https://user-images.githubusercontent.com/21130167/215010698-5bca0585-d6a5-4934-ac1d-6c4a441b2abc.png>

And here is another run with 1280^3 particles and 640 MPI tasks (5 nodes).

[image: fastpm_5node]

<https://user-images.githubusercontent.com/21130167/215010714-3b6478ef-1b40-4412-81f0-f94045f0e8c6.png>

It seems that this strange feature strongly depends on the number of nodes. I will try 2 nodes with 128 ranks.


|

|

I submitted the job and will do the comparison between 1 node and 2 nodes. I think the z=9 snapshot plot in my first post is just 2LPT, since the IC is generated at z=9? We can already see two rectangles there at the top left and bottom right. I ran FastPM with OMP_NUM_THREADS=1, but compiled it with -fopenmp. And yes, I compiled with gcc. |

|

Thanks. It is also worth trying whether switching to a different MPI library helps.

…On Thu, Jan 26, 2023 at 9:50 PM Biwei Dai ***@***.***> wrote:


|

|

That's good progress, knowing that more particles are wrong than right. Are the velocities very off (by an order of magnitude? in which direction?)

What if you further shrink this down to, say, just 4 ranks or 2 ranks? I think in general having a smaller reproducer will help debugging by reducing the turnaround time.

Most of FastPM sends data around as raw bytes; this is correct only if we assume all nodes have the same architecture.
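To illustrate what "sending data as bytes" relies on: the sketch below copies records into a byte buffer with `memcpy`, the way a raw byte exchange would transport them. The `struct particle` layout and the function names are hypothetical, not FastPM's. Decoding the bytes on the other side is only valid when sender and receiver agree on endianness, type sizes, and struct padding.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical particle record; FastPM's real layout differs. */
struct particle {
    double  x[3];
    float   v[3];
    int64_t id;
};

/* Returns 1 on a little-endian host (x86-64 nodes are little-endian). */
int host_is_little_endian(void)
{
    const uint32_t one = 1;
    unsigned char first;
    memcpy(&first, &one, 1);
    return first == 1;
}

/* Copy records into a byte buffer exactly as they sit in memory. */
size_t pack_particles(unsigned char *buf, const struct particle *p, size_t n)
{
    size_t nbytes = n * sizeof *p;
    memcpy(buf, p, nbytes);
    return nbytes;
}

/* Reinterpret the bytes as records again. Only correct if the receiver
 * shares the sender's endianness, type sizes and struct padding. */
size_t unpack_particles(struct particle *p, const unsigned char *buf,
                        size_t nbytes)
{
    assert(nbytes % sizeof *p == 0);
    memcpy(p, buf, nbytes);
    return nbytes / sizeof *p;
}
```

On a homogeneous machine (same CPU family and compiler on every node) the round trip is exact; a difference in any of those layout properties would silently scramble the fields instead of raising an error.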

One potential issue I can see is that maybe the Perlmutter nodes are heterogeneous? This is very unlikely, but I did hear the machine is innovative on many fronts.

Anyhow, our main strategy for fixing this would be to isolate the very first point of divergence between the 2 runs.

How do DX1 and DX2 compare between the 2 runs, near the beginning of the run?
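One way to locate the first point of divergence, sketched under the assumption that DX1 (or DX2) from both runs has been dumped for the same particles in the same order. `first_divergence` is a hypothetical helper, not a FastPM function; its `!(diff <= tol)` comparison also treats a NaN as a divergence.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical helper: index of the first element where two runs disagree
 * by more than tol, or -1 if they agree everywhere. Feed it, e.g., one DX1
 * component of the same particles (sorted by ID) from the 1-node and the
 * 2-node run. */
long first_divergence(const double *a, const double *b, size_t n, double tol)
{
    assert(tol >= 0.0);
    for (size_t i = 0; i < n; i++) {
        double diff = a[i] - b[i];
        if (diff < 0.0)
            diff = -diff;
        if (!(diff <= tol))   /* also catches NaN from junk data */
            return (long)i;
    }
    return -1;
}
```

Because the particles are sorted by ID, the returned index maps back to a particle ID and therefore to the rank that generated it.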

If you compare the log files, maybe some of the statistics of the particle properties start to diverge at some point, and we can further isolate the failing module?

Adding more logging of the 'statistics' of particles will help narrow this down too.
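A minimal serial sketch of such a statistics line, in the spirit of FastPM's `min = ... max = ... mean = ... std = ...` log output. In an MPI run, each rank's value would first be collected on one rank (for example with `MPI_Gather`); that step is left out so the sketch runs without MPI. It reports the population variance instead of the standard deviation only to avoid a libm dependency (std = sqrt(var)). The names `summarize` and `log_summary` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* min, max, mean and population variance of n values */
struct summary {
    double min, max, mean, var;
};

struct summary summarize(const double *v, size_t n)
{
    assert(n > 0);
    struct summary s = { v[0], v[0], 0.0, 0.0 };
    for (size_t i = 0; i < n; i++) {
        if (v[i] < s.min) s.min = v[i];
        if (v[i] > s.max) s.max = v[i];
        s.mean += v[i];
    }
    s.mean /= (double)n;
    for (size_t i = 0; i < n; i++) {
        double d = v[i] - s.mean;
        s.var += d * d;
    }
    s.var /= (double)n;   /* population variance; std = sqrt(var) */
    return s;
}

void log_summary(const char *label, struct summary s)
{
    printf("%s: min = %g max = %g mean = %g var = %g\n",
           label, s.min, s.max, s.mean, s.var);
}
```

As a worked check, assuming the logged std is the population standard deviation: for four ranks with `min = 0 max = 16 mean = 8 std = 8`, every value lies within 8 of the mean, and a variance of 64 forces every deviation to be exactly ±8, so the per-rank values must be two 0s and two 16s. Which ranks hold which value cannot be read off the summary alone.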

(BTW, did you try using a different compiler / MPI implementation?)

- Yu

…On Sat, Jan 28, 2023 at 2:42 PM Biwei Dai ***@***.***> wrote:

2LPT at z=9 with 1 node, 128 MPI tasks:

[image: 2lpt_1node]

<https://user-images.githubusercontent.com/21130167/215293213-c3fee7ca-b839-427d-ab7c-0750535e0621.png>

2LPT at z=9 with 2 nodes, 128 MPI tasks:

[image: 2lpt_2node]

<https://user-images.githubusercontent.com/21130167/215293232-8274b9e5-a5e4-460f-b76d-24b605d314c5.png>

These two plots use the same colorbar. It seems that 2 nodes give a larger density contrast, plus the strange rectangles at the top left and bottom right.

6.25% of the particles are the same between the two runs. I plotted the particles that are the same between the two runs, and here are their locations:

[image: 2lpt_samep]

<https://user-images.githubusercontent.com/21130167/215294339-d0affe2b-f201-4df6-a37c-64ab19b61ea4.png>

So it seems that the MPI ranks at the top left and bottom right are correct, and the other MPI ranks are wrong?


|

|

Yes, I tried to use OpenMPI (I was using Cray MPICH before), but ran into some different problems running the code with OpenMPI and mpirun. Most of the time the jobs just fail, but with a certain setup I get correct results with 2 nodes. I have submitted a ticket to NERSC for help. Here is the link to the ticket: I don't think Perlmutter supports the Intel compiler, so we cannot use Intel MPI? I will check the other things you mentioned. |

|

The code seems correct with 2 nodes, 2 tasks, but fails with 2 nodes, 4 tasks. DX1 and DX2 are correct on rank 0 and rank 3, but both of them are wrong on rank 1 and rank 2. Looking at the log files, the first deviation happens when sending/receiving the ghosts before 2LPT. Here is the log file from the correct run (1 node, 4 tasks, 4^3 particles):

Sending ghosts: min = 0 max = 0 mean = 0 std = 0 [ pmghosts.c:173 ]

And here is the log file from the incorrect run (2 nodes, 4 tasks, 4^3 particles):

Sending ghosts: min = 0 max = 16 mean = 8 std = 8 [ pmghosts.c:173 ] |

|

The difference in the number of ghosts could be legit, as there are more ranks. Could you share the log files?

It could be that the properties of the ghosts received are incorrect (not the same as what was sent). We'll be able to tell if we add a few logging lines to dump the ghost properties before sending and after receiving.
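A minimal sketch of such a check, assuming each rank logs a 64-bit hash of every outgoing ghost buffer just before the send and of every incoming buffer just after the receive, tagged with the peer rank so the two ends can be paired up. FNV-1a is used here only because it is short; it is not something FastPM provides, and the function name is hypothetical. Matching hashes on both ends make corruption in transit very unlikely; different hashes mean the exchange itself delivered different bytes.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* 64-bit FNV-1a hash over a byte buffer (e.g. a packed ghost buffer). */
uint64_t fnv1a64(const void *data, size_t nbytes)
{
    assert(data != NULL || nbytes == 0);
    const unsigned char *p = data;
    uint64_t h = 0xcbf29ce484222325ULL;      /* FNV offset basis */
    for (size_t i = 0; i < nbytes; i++) {
        h ^= p[i];
        h *= 0x100000001b3ULL;               /* FNV prime */
    }
    return h;
}
```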

Yu

…On Sun, Jan 29, 2023 at 12:41 PM Biwei Dai ***@***.***> wrote:

The code seems correct with 2 nodes, 2 tasks, but fails with 2 nodes, 4 tasks. DX1 and DX2 are correct on rank 0 and rank 3, but both of them are wrong on rank 1 and rank 2.

Looking at the log files, the first deviation happens when sending/receiving the ghosts before 2LPT. Here is the log file from the correct run (1 node, 4 tasks, 4^3 particles):

Sending ghosts: min = 0 max = 0 mean = 0 std = 0 [ pmghosts.c:173 ]

Receiving ghosts: min = 0 max = 0 mean = 0 std = 0 [ pmghosts.c:177 ]

And here is the log file from the incorrect run (2 nodes, 4 tasks, 4^3 particles):

Sending ghosts: min = 0 max = 16 mean = 8 std = 8 [ pmghosts.c:173 ]

Receiving ghosts: min = 0 max = 16 mean = 8 std = 8 [ pmghosts.c:177 ]


|

|

Also, how does the four-rank run compare with four ranks on a single node? Did 4 ranks on a single node give you the right result?

…On Sun, Jan 29, 2023 at 5:05 PM Feng Yu ***@***.***> wrote:


|

|

I was using 4 ranks for both runs (1 node * 4 ranks vs. 2 nodes * 2 ranks/node), so they should give the same results? I printed the particle information before 2LPT (uniform grid). With 2 nodes, rank 0 and rank 3 are correct. But the particles on rank 1 should belong to rank 2, so rank 1 sends all of its particles to rank 2. Similarly, the particles on rank 2 should belong to rank 1, so rank 2 sends all of its particles to rank 1. Can you think of any reason that could cause this kind of bug? |
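Ranks 0 and 3 staying correct while ranks 1 and 2 trade places is exactly the pattern a 2 x 2 process mesh produces when two pieces of code number the mesh cells in different orders (row-major vs. column-major). The sketch below only illustrates that arithmetic; the functions are a hypothetical model, not how FastPM or pfft actually assign ranks. It also does not by itself explain why only multi-node runs are affected, which would still require something that behaves differently across nodes.

```c
#include <assert.h>

/* Hypothetical model of an np0 x np1 process mesh: the cell at mesh
 * coordinates (i0, i1) receives a rank under two numbering conventions. */
int rank_row_major(int i0, int i1, int np0, int np1)
{
    assert(i0 >= 0 && i0 < np0 && i1 >= 0 && i1 < np1);
    return i0 * np1 + i1;
}

int rank_col_major(int i0, int i1, int np0, int np1)
{
    assert(i0 >= 0 && i0 < np0 && i1 >= 0 && i1 < np1);
    return i1 * np0 + i0;
}

/* Mesh index owning coordinate x when a box of size boxsize is split
 * into np equal slabs along one axis; assumes 0 <= x < boxsize. */
int slab_index(double x, double boxsize, int np)
{
    assert(np > 0 && x >= 0.0 && x < boxsize);
    int i = (int)(x / boxsize * np);
    return i < np ? i : np - 1;   /* guard against rounding at the edge */
}
```

With np0 = np1 = 2, cells (0, 0) and (1, 1) map to ranks 0 and 3 under both conventions, while (0, 1) and (1, 0) map to 1 and 2 under one and to 2 and 1 under the other, so each side would believe the other owns its particles.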

|

Could the computation of the domain boundaries be buggy?

Are the particle positions all wrong on the wrong ranks, or are they the same as in the 1-node run, but somehow the 2-node run wants to send them?

This can happen if one part of the program gets a different value from MPI_Comm_rank. One possibility is, e.g., that the pfft library is linked to a wrong version of MPI?

Not sure how to check that. Maybe add logging of the pid and the rank inside the pfft plan, and also in FastPM itself.

Yu

…On Sun, Jan 29, 2023 at 5:24 PM Biwei Dai ***@***.***> wrote:


|

The particle positions are all wrong on the wrong ranks (i.e., they are not the same as the 1 node run).

Indeed. I checked "pm->IRegion.start" and "pm->IRegion.size" returned by the "pm_init" function, and they are incorrect. Is this because I didn't compile pfft correctly? Do I need to modify these two lines in Makefile.local? PFFT_CONFIGURE_FLAGS = --enable-sse2 --enable-avx Why does it work on one node but fail on several nodes?

Thanks! This is exactly what happens! The fastpm comm (MPI_COMM_WORLD) and pfft comm (Comm2D from "pfft_create_procmesh") give me different rank values. How can I fix this issue? |
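This check can be turned into a small reusable diagnostic: every process calls `MPI_Comm_rank` on both `MPI_COMM_WORLD` and the pfft communicator, the pairs are collected on one process (for instance with `MPI_Gather`), and the processes where the two ranks disagree are listed. To keep the sketch self-contained and runnable without an MPI runtime, the gathered arrays are taken as plain inputs; the function name is hypothetical.

```c
#include <assert.h>
#include <stdio.h>

/* world_rank[k] and cart_rank[k] are the ranks reported by process k in
 * MPI_COMM_WORLD and in the pfft communicator (e.g. Comm2D from
 * pfft_create_procmesh). Prints and counts the processes where they differ. */
int report_rank_mismatches(const int *world_rank, const int *cart_rank,
                           int nproc)
{
    assert(nproc >= 0);
    int mismatches = 0;
    for (int k = 0; k < nproc; k++) {
        if (world_rank[k] != cart_rank[k]) {
            printf("process %d: world rank %d, pfft rank %d\n",
                   k, world_rank[k], cart_rank[k]);
            mismatches++;
        }
    }
    return mismatches;
}
```

For the 2-node, 4-task case described above, a world numbering of {0, 1, 2, 3} against a pfft numbering of {0, 2, 1, 3} would report two mismatching processes.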

|

I'd forward this information to NERSC. Could you check pfft-single.log and see if the compiler and moo being used are the same as the ones used by FastPM?

It could be that the lines passing along CC or MPICC were not doing the right thing, but it is unlikely to be due to those flags you mentioned. What was the Makefile.local of FastPM?

…On Mon, Jan 30, 2023 at 2:08 PM Biwei Dai ***@***.***> wrote:


|

|

Thanks. I also opened a ticket at NERSC. If you contact NERSC you could mention the ticket INC0197829. I am not sure what moo is. The compiler seems to be cc, the same as fastpm. Here is the pfft-single.log file: My Makefile.local is very similar to Makefile.local.example: CC = cc I also tried CC = mpicc, but it doesn't make a difference. |

|

Maybe it is related to static vs. dynamic linking. See if adding -static to Makefile.local's CC helps.

…On Mon, Jan 30, 2023 at 7:44 PM Biwei Dai ***@***.***> wrote:

Thanks. I also opened a ticket at NERSC. If you contact NERSC, you could mention the ticket INC0197829.

I am not sure what moo is. The compiler seems to be cc, the same as FastPM. Here is the pfft-single.log file:

pfft-single.log

<https://github.com/fastpm/fastpm/files/10542465/pfft-single.log>

My Makefile.local is very similar to Makefile.local.example:

CC = cc

OPTIMIZE = -O3 -g

GSL_LIBS = -lgsl -lgslcblas

PFFT_CONFIGURE_FLAGS = --enable-sse2 --enable-avx

PFFT_CFLAGS =

I also tried CC = mpicc, but it doesn't make a difference.


|

|

The static compilation fails with the following error:

And here is the compilation output related to pfft:

Here are the config.log and pfft-single.log: By the way, there is a warning for static compilation on Perlmutter (see https://docs.nersc.gov/development/compilers/wrappers/#static-compilation ):

|

|

Probably move the -static to the flags (for both FastPM and pfft) instead of making it part of the compiler name. This looks like a word-splitting issue.

…On Tue, Jan 31, 2023 at 12:57 PM Biwei Dai ***@***.***> wrote:

*The static compilation fails with the following error:*

Archive file install/lib/libfftw3.a not found.

make[1]: *** [Makefile:39: libfastpm-dep.a] Error 1

make[1]: Leaving directory '/global/u1/b/biwei/fastpm/depends'

make: *** [Makefile:10: all] Error 2

*And here is the compilation output related to pfft:*

(make "CPPFLAGS=" "OPENMP=-fopenmp" "CC=cc -static" -f Makefile.pfft "PFFT_CONFIGURE_FLAGS=--enable-sse2 --enable-avx" "PFFT_CFLAGS=")

make[2]: Entering directory '/global/u1/b/biwei/fastpm/depends'

mkdir -p download

curl -L -o download/pfft-1.0.8-alpha3-fftw3.tar.gz https://github.com/rainwoodman/pfft/releases/download/1.0.8-alpha3-fftw3/pfft-1.0.8-alpha3-fftw3.tar.gz ; \

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

0 0 0 0 0 0 0 0 --:--:-- --:--:-- --:--:-- 0

100 4408k 100 4408k 0 0 1794k 0 0:00:02 0:00:02 --:--:-- 3748k

mkdir -p src ;

gzip -dc download/pfft-1.0.8-alpha3-fftw3.tar.gz | tar xf - -C src ;

touch /global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure

mkdir -p double;

(cd double;

/global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure --prefix=/global/u1/b/biwei/fastpm/depends/install --disable-shared --enable-static --disable-fortran --disable-doc --enable-mpi --enable-sse2 --enable-avx --enable-openmp "CFLAGS=" "CC=cc -static" "MPICC=cc -static" 2>&1 ;

make -j 4 2>&1 ;

make install 2>&1;

) | tee pfft-double.log | tail

checking for function MPI_Init in -lmpich... no

configure: error: in `/global/homes/b/biwei/fastpm/depends/double':

configure: error: PFFT requires an MPI C compiler. See `config.log' for more details

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: *** No targets specified and no makefile found. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: *** No rule to make target 'install'. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/double'

mkdir -p single;

(cd single;

/global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure --prefix=/global/u1/b/biwei/fastpm/depends/install --enable-single --disable-shared --enable-static --disable-fortran --disable-doc --enable-mpi --enable-sse --enable-avx --enable-openmp "CFLAGS=" "CC=cc -static" "MPICC=cc -static" 2>&1 ;

make -j 4 2>&1 ;

make install 2>&1;

) | tee pfft-single.log | tail

checking for function MPI_Init in -lmpich... no

configure: error: in `/global/homes/b/biwei/fastpm/depends/single':

configure: error: PFFT requires an MPI C compiler. See `config.log' for more details

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: *** No targets specified and no makefile found. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: *** No rule to make target 'install'. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/single'

make[2]: Leaving directory '/global/u1/b/biwei/fastpm/depends'

*Here are the config.log and pfft-single.log:*

config.log <https://github.com/fastpm/fastpm/files/10550876/config.log>

pfft-single.log

<https://github.com/fastpm/fastpm/files/10550883/pfft-single.log>

*By the way, there is a warning for static compilation on Perlmutter (see https://docs.nersc.gov/development/compilers/wrappers/#static-compilation):*

Static linking can fail on Perlmutter

Please note that static compilation is not supported by NERSC, and it was observed that building statically linked executables can fail as the compiler wrappers may not properly link necessary static PE libraries.


|

|

I got the error "C compiler cannot create executables".

|

|

Somehow this cc compiler is trying to link CUDA (according to config.log). Could you check whether any suspicious modules are loaded?

…On Tue, Jan 31, 2023 at 5:10 PM Biwei Dai ***@***.***> wrote:

I got the errors that "C compiler cannot create executables"

(make "CPPFLAGS=" "OPENMP=-fopenmp" "CC=cc" -f Makefile.pfft "PFFT_CONFIGURE_FLAGS=--enable-sse2 --enable-avx" "PFFT_CFLAGS=-static")

make[2]: Entering directory '/global/u1/b/biwei/fastpm/depends'

mkdir -p src ;

gzip -dc download/pfft-1.0.8-alpha3-fftw3.tar.gz | tar xf - -C src ;

touch /global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure

mkdir -p double;

(cd double;

/global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure --prefix=/global/u1/b/biwei/fastpm/depends/install --disable-shared --enable-static --disable-fortran --disable-doc --enable-mpi --enable-sse2 --enable-avx --enable-openmp "CFLAGS=-static" "CC=cc" "MPICC=cc" 2>&1 ;

make -j 4 2>&1 ;

make install 2>&1;

) | tee pfft-double.log | tail

checking whether the C compiler works... no

configure: error: in `/global/homes/b/biwei/fastpm/depends/double':

configure: error: C compiler cannot create executables See `config.log' for more details

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: *** No targets specified and no makefile found. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: *** No rule to make target 'install'. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/double'

mkdir -p single;

(cd single;

/global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure --prefix=/global/u1/b/biwei/fastpm/depends/install --enable-single --disable-shared --enable-static --disable-fortran --disable-doc --enable-mpi --enable-sse --enable-avx --enable-openmp "CFLAGS=-static" "CC=cc" "MPICC=cc" 2>&1 ;

make -j 4 2>&1 ;

make install 2>&1;

) | tee pfft-single.log | tail

checking whether the C compiler works... no

configure: error: in `/global/homes/b/biwei/fastpm/depends/single':

configure: error: C compiler cannot create executables See `config.log' for more details

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: *** No targets specified and no makefile found. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: *** No rule to make target 'install'. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/single'

make[2]: Leaving directory '/global/u1/b/biwei/fastpm/depends'

config.log <https://github.com/fastpm/fastpm/files/10552277/config.log>

pfft-single.log

<https://github.com/fastpm/fastpm/files/10552282/pfft-single.log>


|

|

Yes, gpu and cudatoolkit are loaded. After removing these two modules, I got back the "PFFT requires an MPI C compiler." error.

Here are the modules I loaded:

I also asked NERSC about static compilation on Perlmutter, and they said that "Static compilation is not supported on Perlmutter." Can we reorder the rank values so that fastpm rank and pfft rank are consistent? Do you think this will fix the problem? |

|

Let’s give up on static then.

I wonder if this has something to do with Shifter. Do you know if that's enabled universally on Perlmutter? Shifter dynamically replaces the MPI library of a program. If, for example, it only replaced part of it because pfft was statically linked to another one, then this may happen.

It is not good for 2 MPI libraries to be used in the same program. Usually, making sure we are linking the program correctly is a cleaner solution than coming up with a hack like that (and I don't know how to do that either).

Is there a way to figure out which libraries are used? The cc command from Cray may have a flag to print out the full command line used for FastPM.

The config.log file may contain clues about which MPI is used in pfft.

The Makefile for pfft adds --enable-static and --disable-shared to the flags; maybe that caused it to pick up an unsupported MPI?
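One way to see which shared libraries a running binary actually loaded, as a sketch assuming a Linux/glibc system: `dl_iterate_phdr` walks every shared object mapped into the process, so calling a helper like the hypothetical `list_loaded_objects("mpi")` near the start of FastPM would print the MPI shared libraries in use at run time. It only sees shared objects, so anything linked in statically will not appear; it complements, rather than replaces, inspecting the link line and config.log.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <link.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

struct match_ctx {
    const char *needle;
    int count;
};

/* Called once per loaded object; prints the path if it contains needle. */
static int print_if_match(struct dl_phdr_info *info, size_t size, void *data)
{
    struct match_ctx *ctx = data;
    (void)size;
    const char *name = info->dlpi_name;   /* "" for the main executable */
    if (name != NULL && name[0] != '\0' && strstr(name, ctx->needle) != NULL) {
        printf("loaded: %s\n", name);
        ctx->count++;
    }
    return 0;   /* 0 = keep iterating */
}

/* Hypothetical helper: print and count loaded shared objects whose path
 * contains needle, e.g. "mpi" or "fftw". */
int list_loaded_objects(const char *needle)
{
    assert(needle != NULL);
    struct match_ctx ctx = { needle, 0 };
    dl_iterate_phdr(print_if_match, &ctx);
    return ctx.count;
}
```

Seeing two different MPI libraries listed, or an unexpected path, would support the suspicion that two MPI libraries are mixed in one program.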

…On Tue, Jan 31, 2023 at 5:53 PM Biwei Dai ***@***.***> wrote:

Yes, gpu and cudatoolkit are loaded. After removing these two modules, I got back the "PFFT requires an MPI C compiler." error.

(make "CPPFLAGS=" "OPENMP=-fopenmp" "CC=cc" -f Makefile.pfft "PFFT_CONFIGURE_FLAGS=--enable-sse2 --enable-avx" "PFFT_CFLAGS=-static")

make[2]: Entering directory '/global/u1/b/biwei/fastpm/depends'

mkdir -p src ;

gzip -dc download/pfft-1.0.8-alpha3-fftw3.tar.gz | tar xf - -C src ;

touch /global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure

mkdir -p double;

(cd double;

/global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure --prefix=/global/u1/b/biwei/fastpm/depends/install --disable-shared --enable-static --disable-fortran --disable-doc --enable-mpi --enable-sse2 --enable-avx --enable-openmp "CFLAGS=-static" "CC=cc" "MPICC=cc" 2>&1 ;

make -j 4 2>&1 ;

make install 2>&1;

) | tee pfft-double.log | tail

checking for function MPI_Init in -lmpich... no

configure: error: in `/global/homes/b/biwei/fastpm/depends/double':

configure: error: PFFT requires an MPI C compiler. See `config.log' for more details

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: *** No targets specified and no makefile found. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/double'

make[3]: *** No rule to make target 'install'. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/double'

mkdir -p single;

(cd single;

/global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure --prefix=/global/u1/b/biwei/fastpm/depends/install --enable-single --disable-shared --enable-static --disable-fortran --disable-doc --enable-mpi --enable-sse --enable-avx --enable-openmp "CFLAGS=-static" "CC=cc" "MPICC=cc" 2>&1 ;

make -j 4 2>&1 ;

make install 2>&1;

) | tee pfft-single.log | tail

checking for function MPI_Init in -lmpich... no

configure: error: in `/global/homes/b/biwei/fastpm/depends/single':

configure: error: PFFT requires an MPI C compiler. See `config.log' for more details

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: *** No targets specified and no makefile found. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/single'

make[3]: *** No rule to make target 'install'. Stop.

make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/single'

make[2]: Leaving directory '/global/u1/b/biwei/fastpm/depends'

pfft-single.log

<https://github.com/fastpm/fastpm/files/10552491/pfft-single.log>

config.log <https://github.com/fastpm/fastpm/files/10552492/config.log>

Here are the modules I loaded:

Currently Loaded Modules:

1) craype-x86-milan
2) libfabric/1.15.2.0
3) craype-network-ofi
4) xpmem/2.5.2-2.4_3.20__gd0f7936.shasta
5) PrgEnv-gnu/8.3.3
6) cray-dsmml/0.2.2
7) cray-libsci/22.11.1.2
8) cray-mpich/8.1.22
9) craype/2.7.19
10) gcc/11.2.0
11) perftools-base/22.09.0
12) cpe/22.11
13) xalt/2.10.2
14) gsl/2.7
15) cray-pmi/6.1.7

I also asked NERSC about static compilation on Perlmutter, and they said that "Static compilation is not supported on Perlmutter."

Can we reorder the rank values so that the FastPM rank and the pfft rank are consistent? Do you think this will fix the problem?


|

|

Could you share config.log from a usual build?

Yu

…On Tue, Jan 31, 2023 at 5:53 PM Biwei Dai ***@***.***> wrote:

Yes gpu and cudatoolkit are loaded. After removing these two modules, I

got back the "PFFT requires an MPI C compiler." error..

```
(make "CPPFLAGS=" "OPENMP=-fopenmp" "CC=cc" -f Makefile.pfft "PFFT_CONFIGURE_FLAGS=--enable-sse2 --enable-avx" "PFFT_CFLAGS=-static")
make[2]: Entering directory '/global/u1/b/biwei/fastpm/depends'
mkdir -p src ;
gzip -dc download/pfft-1.0.8-alpha3-fftw3.tar.gz | tar xf - -C src ;
touch /global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure
mkdir -p double;
(cd double;
/global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure --prefix=/global/u1/b/biwei/fastpm/depends/install --disable-shared --enable-static --disable-fortran --disable-doc --enable-mpi --enable-sse2 --enable-avx --enable-openmp "CFLAGS=-static" "CC=cc" "MPICC=cc" 2>&1 ;
make -j 4 2>&1 ;
make install 2>&1;
) | tee pfft-double.log | tail
checking for function MPI_Init in -lmpich... no
configure: error: in `/global/homes/b/biwei/fastpm/depends/double':
configure: error: PFFT requires an MPI C compiler. See `config.log' for more details
make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/double'
make[3]: *** No targets specified and no makefile found. Stop.
make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/double'
make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/double'
make[3]: *** No rule to make target 'install'. Stop.
make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/double'
mkdir -p single;
(cd single;
/global/u1/b/biwei/fastpm/depends/src/pfft-1.0.8-alpha3-fftw3/configure --prefix=/global/u1/b/biwei/fastpm/depends/install --enable-single --disable-shared --enable-static --disable-fortran --disable-doc --enable-mpi --enable-sse --enable-avx --enable-openmp "CFLAGS=-static" "CC=cc" "MPICC=cc" 2>&1 ;
make -j 4 2>&1 ;
make install 2>&1;
) | tee pfft-single.log | tail
checking for function MPI_Init in -lmpich... no
configure: error: in `/global/homes/b/biwei/fastpm/depends/single':
configure: error: PFFT requires an MPI C compiler. See `config.log' for more details
make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/single'
make[3]: *** No targets specified and no makefile found. Stop.
make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/single'
make[3]: Entering directory '/global/u1/b/biwei/fastpm/depends/single'
make[3]: *** No rule to make target 'install'. Stop.
make[3]: Leaving directory '/global/u1/b/biwei/fastpm/depends/single'
make[2]: Leaving directory '/global/u1/b/biwei/fastpm/depends'
```

pfft-single.log <https://github.com/fastpm/fastpm/files/10552491/pfft-single.log>

config.log <https://github.com/fastpm/fastpm/files/10552492/config.log>

Here are the modules I loaded:

```
Currently Loaded Modules:
  1) craype-x86-milan
  2) libfabric/1.15.2.0
  3) craype-network-ofi
  4) xpmem/2.5.2-2.4_3.20__gd0f7936.shasta
  5) PrgEnv-gnu/8.3.3
  6) cray-dsmml/0.2.2
  7) cray-libsci/22.11.1.2
  8) cray-mpich/8.1.22
  9) craype/2.7.19
 10) gcc/11.2.0
 11) perftools-base/22.09.0
 12) cpe/22.11
 13) xalt/2.10.2
 14) gsl/2.7
 15) cray-pmi/6.1.7
```

I also asked NERSC about static compilation on Perlmutter, and they said that "Static compilation is not supported on Perlmutter."

Can we reorder the rank values so that the fastpm rank and the pfft rank are consistent? Do you think this will fix the problem?

—

Reply to this email directly, view it on GitHub

<#112 (comment)>, or

unsubscribe

<https://github.com/notifications/unsubscribe-auth/AABBWTEFY5MYJDZVWJ65FE3WVG6ZVANCNFSM6AAAAAAUIFPAQU>

.

You are receiving this because you commented.Message ID:

***@***.***>

|

|

Here are the compilation log files that work on one node. I am not sure about Shifter; I will read the NERSC Shifter documentation and see if there is any related information. Perlmutter is down now. I can check which MPI libraries cc uses after it is back online. Maybe I can remove the --enable-static flag in Makefile.pfft? |

|

On Tue, Jan 31, 2023 at 10:56 PM Biwei Dai ***@***.***> wrote:

> Here are the compilation log files that work on one node.
>
> pfft-single.log <https://github.com/fastpm/fastpm/files/10553952/pfft-single.log>
>
> config.log <https://github.com/fastpm/fastpm/files/10553953/config.log>

Nothing looked out of place in these files.

> I am not sure about shifter. I will read the NERSC shifter documentation and see if there is any related information.
>
> Perlmutter is down now. I can check which mpi libraries cc used after it is back online.
>
> Maybe I can remove the --enable-static flag in Makefile.pfft?

Maybe. I wonder: if you check the rank/position mapping from bigfile and mpsort, how does it look? Is it also wrong?

All of the dependencies are built as "relocatable" archives, which is really what --enable-static does: the .a files are no more than a bunch of .o files. These .o files are then linked into one giant fastpm binary. When this all works correctly, only the fastpm binary pulls in a single MPI, so whether that MPI is linked dynamically or statically doesn't make a difference.

What do you get with ldd fastpm?

Yu


|

|

Here is the output of ldd fastpm

For compiler mpicc, here is the output of "mpicc -show"

For compiler cc, I am not sure how to print out its full command line, since it doesn't recognize "cc -show" or "cc -link_info". I tried disabling the Shifter modules with "#SBATCH --module=none" in the job script, but it makes no difference (see https://docs.nersc.gov/development/shifter/how-to-use/#shifter-modules). Removing the "--disable-shared" and "--enable-static" flags in Makefile.pfft also makes no difference. |

|

These all looked sane. If fastpm and mp-sort also disagree on the rank, then we have a smaller reproducer of the issue; pfft is kind of large.

If the only issue is with pfft, then one possible workaround is to also enable a dynamic (shared) build of pfft and link to it dynamically.

Could you also do an objdump -t -T on the fastpm binary? I'd like to see whether there are two sets of MPI symbols or something along those lines.

I think at some point we also had a command that repackages all of the dependency .a files into a single large one. It may be useful to check whether any MPI symbols are defined in these .a files (there shouldn't be any).

On Wed, Feb 1, 2023 at 12:44 PM Biwei Dai ***@***.***> wrote:

Here is the output of ldd fastpm

```
linux-vdso.so.1 (0x00007fffef5f8000)
libgsl.so.25 => /global/common/software/spackecp/perlmutter/e4s-22.05/75197/spack/opt/spack/cray-sles15-zen3/gcc-11.2.0/gsl-2.7-fhx3zdzzsac7koioqjzpx2uvg4wg4caw/lib/libgsl.so.25 (0x00001461cb6e0000)
libgslcblas.so.0 => /global/common/software/spackecp/perlmutter/e4s-22.05/75197/spack/opt/spack/cray-sles15-zen3/gcc-11.2.0/gsl-2.7-fhx3zdzzsac7koioqjzpx2uvg4wg4caw/lib/libgslcblas.so.0 (0x00001461cb4a3000)
libm.so.6 => /lib64/libm.so.6 (0x00001461cb158000)
libcuda.so.1 => /usr/lib64/libcuda.so.1 (0x00001461c9cf4000)
libmpi_gnu_91.so.12 => /opt/cray/pe/lib64/libmpi_gnu_91.so.12 (0x00001461c70d6000)
libmpi_gtl_cuda.so.0 => /opt/cray/pe/lib64/libmpi_gtl_cuda.so.0 (0x00001461c6e92000)
libdl.so.2 => /lib64/libdl.so.2 (0x00001461c6c8e000)
libxpmem.so.0 => /opt/cray/xpmem/default/lib64/libxpmem.so.0 (0x00001461c6a8b000)
libpthread.so.0 => /lib64/libpthread.so.0 (0x00001461c6868000)
libgomp.so.1 => /opt/cray/pe/gcc-libs/libgomp.so.1 (0x00001461c6621000)
libc.so.6 => /lib64/libc.so.6 (0x00001461c622c000)
/lib64/ld-linux-x86-64.so.2 (0x00001461cbbb4000)
librt.so.1 => /lib64/librt.so.1 (0x00001461c6023000)
libfabric.so.1 => /opt/cray/libfabric/1.15.2.0/lib64/libfabric.so.1 (0x00001461c5d31000)
libatomic.so.1 => /opt/cray/pe/gcc-libs/libatomic.so.1 (0x00001461c5b28000)
libpmi.so.0 => /opt/cray/pe/lib64/libpmi.so.0 (0x00001461c5926000)
libpmi2.so.0 => /opt/cray/pe/lib64/libpmi2.so.0 (0x00001461c56ed000)
libgfortran.so.5 => /opt/cray/pe/gcc-libs/libgfortran.so.5 (0x00001461c5222000)
libgcc_s.so.1 => /opt/cray/pe/gcc-libs/libgcc_s.so.1 (0x00001461c5003000)
libquadmath.so.0 => /opt/cray/pe/gcc-libs/libquadmath.so.0 (0x00001461c4dbe000)
libcudart.so.11.0 => /opt/nvidia/hpc_sdk/Linux_x86_64/22.5/cuda/11.7/lib64/libcudart.so.11.0 (0x00001461c4b19000)
libstdc++.so.6 => /opt/cray/pe/gcc-libs/libstdc++.so.6 (0x00001461c46f7000)
libcxi.so.1 => /usr/lib64/libcxi.so.1 (0x00001461c44d2000)
libcurl.so.4 => /usr/lib64/libcurl.so.4 (0x00001461c4234000)
libjson-c.so.3 => /usr/lib64/libjson-c.so.3 (0x00001461c4024000)
libpals.so.0 => /opt/cray/pe/lib64/libpals.so.0 (0x00001461c3e1f000)
libnghttp2.so.14 => /usr/lib64/libnghttp2.so.14 (0x00001461c3bf7000)
libidn2.so.0 => /usr/lib64/libidn2.so.0 (0x00001461c39da000)
libssh.so.4 => /usr/lib64/libssh.so.4 (0x00001461c376c000)
libpsl.so.5 => /usr/lib64/libpsl.so.5 (0x00001461c355a000)
libssl.so.1.1 => /usr/lib64/libssl.so.1.1 (0x00001461c32bc000)
libcrypto.so.1.1 => /usr/lib64/libcrypto.so.1.1 (0x00001461c2d82000)
libgssapi_krb5.so.2 => /usr/lib64/libgssapi_krb5.so.2 (0x00001461c2b30000)
libldap_r-2.4.so.2 => /usr/lib64/libldap_r-2.4.so.2 (0x00001461c28dc000)
liblber-2.4.so.2 => /usr/lib64/liblber-2.4.so.2 (0x00001461c26cd000)
libzstd.so.1 => /usr/lib64/libzstd.so.1 (0x00001461c239d000)
libbrotlidec.so.1 => /usr/lib64/libbrotlidec.so.1 (0x00001461c2191000)
libz.so.1 => /lib64/libz.so.1 (0x00001461c1f7a000)
libunistring.so.2 => /usr/lib64/libunistring.so.2 (0x00001461c1bf7000)
libkrb5.so.3 => /usr/lib64/libkrb5.so.3 (0x00001461c191e000)
libk5crypto.so.3 => /usr/lib64/libk5crypto.so.3 (0x00001461c1706000)
libcom_err.so.2 => /lib64/libcom_err.so.2 (0x00001461c1502000)
libkrb5support.so.0 => /usr/lib64/libkrb5support.so.0 (0x00001461c12f3000)
libresolv.so.2 => /lib64/libresolv.so.2 (0x00001461c10db000)
libsasl2.so.3 => /usr/lib64/libsasl2.so.3 (0x00001461c0ebe000)
libbrotlicommon.so.1 => /usr/lib64/libbrotlicommon.so.1 (0x00001461c0c9d000)
libkeyutils.so.1 => /usr/lib64/libkeyutils.so.1 (0x00001461c0a98000)
libselinux.so.1 => /lib64/libselinux.so.1 (0x00001461c086f000)
libpcre.so.1 => /usr/lib64/libpcre.so.1 (0x00001461c05e6000)
```

For compiler mpicc, here is the output of "mpicc -show"

```
gcc -I/opt/cray/pe/mpich/8.1.22/ofi/gnu/9.1/include -L/opt/cray/pe/mpich/8.1.22/ofi/gnu/9.1/lib -lmpi_gnu_91
```

For compiler cc, I am not sure how to print out its full command line, since it doesn't recognize "cc -show" or "cc -link_info".

I tried disabling the Shifter modules with "#SBATCH --module=none" in the job script, but it makes no difference: https://docs.nersc.gov/development/shifter/how-to-use/#shifter-modules

Removing the "--enable-static" flag in Makefile.pfft also makes no difference.


|

|

Here is the output of "objdump -t -T fastpm" (it's too long, so I put it in a text file).

Is there a way to check whether fastpm and mpsort agree on the MPI rank? For pfft, it returns its communicator Comm2D, so I can check the pfft rank values in fastpm. For mpsort, does it also have a similar MPI communicator in fastpm? |

|

Hmm. The Comm2D is constructed from a COMM, so could it be that we had some issues creating the Comm2D?

On Wed, Feb 1, 2023 at 6:06 PM Biwei Dai ***@***.***> wrote:

> Here is the output of "objdump -t -T fastpm" (it's too long, so I put it in a text file):
>
> output.txt <https://github.com/fastpm/fastpm/files/10564109/output.txt>
>
> Is there a way to check whether fastpm and mpsort agree on the MPI rank? For pfft, it returns its communicator Comm2D, so I can check the pfft rank values in fastpm. For mpsort, does it also have a similar MPI communicator in fastpm?


|

|

Maybe our reproducer could simply call MPI_Cart_create and then query MPI_Comm_rank?


|


|

What if we replace ThisTask with the rank we get from Comm2D?

On Wed, Feb 1, 2023 at 6:41 PM Feng Yu ***@***.***> wrote:

> https://github.com/mpip/pfft/blob/e4cfcf9902d0ef82cb49ec722040932b6b598c71/kernel/procmesh.c#L59


|

|

I think we got to the bottom of the problem.

We've set reorder = 1 in pfft's procmesh.c, so the new MPI implementation on Perlmutter decided that, when there are multiple nodes, it is more efficient to reshuffle the ranks (assuming some communication patterns).

https://github.com/mpip/pfft/blob/e4cfcf9902d0ef82cb49ec722040932b6b598c71/kernel/procmesh.c#L58

I think an easier fix is to make a copy of this function inside fastpm, pass in reorder=0 when calling MPI_Cart_create, and then use that Comm2D all around. I think most of FastPM assumes that the ranks are not reordered.

It may be a bit difficult to audit the code and fix all of them (basically, ensure that the original comm is never used and only Comm2D is passed around, or something along those lines).


|

|

I see! So fastpm and pfft are using the same MPI library, but pfft reorders the ranks, so they are no longer consistent with fastpm. I wonder if this reordering depends on the mesh size? For example, the particle mesh and the force mesh may have different resolutions. Do they give the same Comm2D? If yes, then could we call pfft_create_procmesh at the beginning of fastpm and use the returned comm2d? Or could we replace pfft_create_procmesh with MPI_Cart_create and set reorder=0? |

|

On Wed, Feb 1, 2023 at 7:01 PM Biwei Dai ***@***.***> wrote:

> I see! So fastpm and pfft are using the same mpi library, but pfft reorders the ranks so it's no longer consistent with fastpm.
>
> I wonder if this reorder depends on mesh size? For example, particle mesh and force mesh may have different resolutions. Do they give the same Comm2D?

It depends on the number of ranks and the hardware topology, but it won't depend on the mesh size in FastPM (all array meshes use the same Comm2D).

> If yes, then we can call pfft_create_procmesh at the beginning of fastpm, and use the returned comm2d?

I quickly searched all uses of MPI_Comm_rank in the fastpm repository. I think we'll need a way to ensure all of these receive the comm2d instead of the original comm passed to fastpm_init(). Based on a quick scan of the search results, this may not be easy to do.

> Or can we replace pfft_create_procmesh with MPI_Cart_create and set reorder=0?

Yes. This may be easier. It is unclear whether the reordering actually brings performance improvements to FastPM, but without doing the first fix we won't be able to tell.

So both are valid approaches, and approach 1 may be harder than approach 2.


|

|

For approach 2, simply replacing pfft_create_procmesh with … fixes the problem. Does pfft_create_procmesh do anything more than the above two lines? Do you want me to make a pull request for this? Or do you want to try approach 1 and compare the performance first? |

|

Ah, I was wrong about approach 1. It only changes the CPU-to-MPI-rank mapping and doesn't change the MPI-rank-to-domain mapping. Replacing all of the fastpm comm with comm2d should be enough. |
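To illustrate the distinction: MPI numbers the processes of a Cartesian communicator in row-major order, so a process's grid coordinates, and therefore its block of the mesh, are a fixed function of its rank in comm2d. The sketch below uses hypothetical helpers grid_coords and local_range, and the even block split is an assumption for illustration, not pfft's exact decomposition.

```c
/* Row-major coordinates of `rank` in a dims[0] x dims[1] process grid,
 * matching the ordering MPI uses for Cartesian topologies. */
static void grid_coords(int rank, const int dims[2], int coords[2])
{
    coords[0] = rank / dims[1];
    coords[1] = rank % dims[1];
}

/* Hypothetical even block split of a mesh axis of length n over p
 * processes: process c owns indices [*start, *start + *count). */
static void local_range(int n, int p, int c, int *start, int *count)
{
    int base = n / p;
    int extra = n % p; /* the first `extra` processes get one more index */
    *count = base + (c < extra ? 1 : 0);
    *start = c * base + (c < extra ? c : extra);
}
```

For example, on a 4 x 2 grid, a process whose rank is 5 in the original communicator but 2 in comm2d would compute coordinates (2, 1) from ThisTask, while the FFT layout assigns it the block at (1, 0). Deriving every rank from comm2d keeps the two consistent no matter how MPI reorders the processes.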

|

Feel free to file PRs.

Sounds like you are on track to do both. I am curious about the performance impact, especially with a large number of nodes. Perhaps do both and leave reorder as a parameter; then we can do some benchmarking.


|

Hi,

I ran FastPM with two nodes (256 MPI tasks) on NERSC Perlmutter, but got the following results at z=0:

I got this strange structure at the top left and bottom right. We can also see a grid pattern that seems to correspond to the different MPI tasks.

This only happens with multiple nodes. It works well with a single node (128 MPI tasks) on Perlmutter:

This is the linear density field generated with two nodes, which looks fine to me:

The IC is generated at z=9 with 2LPT. This is the snapshot at z=9:

The code is compiled with Makefile.local.example. I am not sure whether I compiled it correctly (there are some warnings but no errors during compilation).
