Training Detectnet_v2 on Jetson AGX Orin #1570

Comments

up!

Hi @ABDELRAHMAN123491, detectnet_v2 is only trained with TAO, and TAO supports x86, so training would need to be done on a PC/server that has a discrete NVIDIA GPU. The old detectnet_v1 architecture is outdated and shouldn't be used for new models. It was trained with DIGITS, which I haven't used in years.

Thanks for the valuable information! By detectnet_v1 I meant the SSD-Mobilenet model (sorry for the confusion), which you have elegantly incorporated into this work for custom data training. Does it support training on Jetson Orin? If not, could you give me some hints for training detectnet_v2? I am really grateful for the amazing work you have done here! I was able to use GStreamer with .pt YOLO models, and the latency is not noticeable at all. I could add this feature to this repo if you permit; I believe it can help YOLO users drop OpenCV, get a more stable view of the detections, and achieve very low latency. Thanks @dusty-nv

Oh yes, it does, through the same train_ssd.py script using PyTorch. It runs on Jetson Orin just the same as before.
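For readers following along, here is a minimal sketch of how a train_ssd.py run could be assembled from Python. The dataset and model paths (`data/ppe`, `models/ppe`) and the helper name `build_train_ssd_command` are hypothetical placeholders, and the batch size, worker count, and epoch count are illustrative values rather than recommendations from this thread.

```python
import shlex


def build_train_ssd_command(dataset_dir, model_dir, dataset_type="voc",
                            batch_size=2, workers=1, epochs=30):
    """Return the argv list for a train_ssd.py run.

    All paths and numeric values are caller-supplied placeholders;
    adjust them to your own dataset and memory budget.
    """
    return [
        "python3", "train_ssd.py",
        f"--dataset-type={dataset_type}",
        f"--data={dataset_dir}",
        f"--model-dir={model_dir}",
        f"--batch-size={batch_size}",
        f"--workers={workers}",
        f"--epochs={epochs}",
    ]


if __name__ == "__main__":
    # Hypothetical dataset/model directories, for illustration only.
    cmd = build_train_ssd_command("data/ppe", "models/ppe")
    print(shlex.join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the directory that contains train_ssd.py. A small batch size and a single worker keep memory use down, which matters most on devices with limited RAM.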

That would be great. Which version of YOLO do you have working?

Oh that's great. Are you using TensorRT like jetson-inference does to run the YOLO inferencing, or PyTorch?

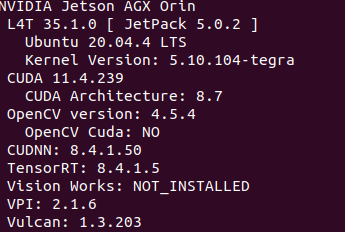

Actually, I am using PyTorch. Converting YOLO to TensorRT and then running inference from Python is something I am still working on. I ran into multiple issues along the way with TensorRT YOLO-based models. For instance, the conversion on Jetson Orin with the JetPack version I have seems to lack support, and running inference with the ".engine" file is not straightforward either.


I attempted to use the export.py file from the yolov5 repository to convert to .engine; however, it yields the following error: Expected all tensors to be on the same device, but found at least two devices...


Typically that means that some tensor is on CPU and some on GPU (i.e.
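One way to track down this kind of mismatch is to group every tensor by the device it lives on and look for the odd one out. The sketch below is a hypothetical helper (`group_by_device` is not part of PyTorch or yolov5); it only assumes each object exposes a `.device` attribute, as PyTorch tensors do, so the demo uses simple stand-in objects instead of real tensors.

```python
from collections import defaultdict
from types import SimpleNamespace


def group_by_device(named_tensors):
    """Map each device string to the names of the tensors stored on it.

    `named_tensors` is any iterable of (name, obj) pairs where obj has
    a `.device` attribute.
    """
    groups = defaultdict(list)
    for name, tensor in named_tensors:
        groups[str(tensor.device)].append(name)
    return dict(groups)


if __name__ == "__main__":
    # Stand-ins for tensors; only the `.device` attribute is used.
    fake = [
        ("conv.weight", SimpleNamespace(device="cuda:0")),
        ("conv.bias", SimpleNamespace(device="cuda:0")),
        ("anchors", SimpleNamespace(device="cpu")),
    ]
    for device, names in group_by_device(fake).items():
        print(device, names)
```

With a real PyTorch model, the same function can be fed `model.named_parameters()` and `model.named_buffers()`, plus a pair for the input tensor. If more than one device shows up in the result, the names listed under the unexpected device are the ones to move.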

The intriguing thing is that I explicitly set the device parameter and made sure that it runs only on the GPU. Have you come across the export.py script in the yolov5 repository before? It supports conversion to a wide range of formats, including .engine. The key point is that using their script should simplify the inference part, since you can load the TensorRT model directly with torch.hub.load and use it in code like any normal YOLO model. They support conversion to FP32 and FP16 precision, which should make detection about 5x faster. So, if you could give export.py a shot and examine the conversion to an .engine model, that would be awesome. Besides, here is my personal email: abdulrhman123491@gmail.com for easier future communication. I am excited to contribute to this work and share with you the script that supports running YOLO models using PyTorch until we find a way to support .engine files. Thanks @dusty-nv


@dusty-nv You can check a "native" Yolo integration in jetson-inference in my fork here. It includes:

I would be happy to figure out how we can upstream these changes. My first attempt was this PR. I have some other ideas like having a separate

Hi @ligaz,


YOLOv7-tiny runs at 55-60 ms on the Jetson Nano. Although the method name is yolov7, those changes will work with any model that uses the efficientNMSPlugin TensorRT plugin, including YOLO v5, v7, and v8. You can check this repo as a reference for how to export a YOLO model with efficientNMSPlugin.
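To illustrate why one code path can serve several YOLO versions, here is a rough sketch of decoding the outputs of a model exported with the efficient NMS plugin. It assumes the plugin's usual four outputs for a single image (a count of valid detections, then padded arrays of boxes, scores, and class indices) and assumes boxes are in corner format (x1, y1, x2, y2). The names `Detection` and `decode_efficient_nms` are hypothetical and are not taken from the fork mentioned above.

```python
from typing import List, NamedTuple, Sequence, Tuple


class Detection(NamedTuple):
    class_id: int
    score: float
    box: Tuple[float, ...]  # assumed (x1, y1, x2, y2)


def decode_efficient_nms(num_dets: int,
                         boxes: Sequence[Sequence[float]],
                         scores: Sequence[float],
                         classes: Sequence[int],
                         min_score: float = 0.0) -> List[Detection]:
    """Decode one image's NMS outputs into a list of detections.

    Only the first `num_dets` entries are valid; the rest of each
    array is padding and is ignored.
    """
    results = []
    for i in range(int(num_dets)):
        if scores[i] < min_score:
            continue
        box = tuple(float(v) for v in boxes[i])
        results.append(Detection(int(classes[i]), float(scores[i]), box))
    return results


if __name__ == "__main__":
    # Made-up values for illustration: two valid detections, one padding row.
    dets = decode_efficient_nms(
        2,
        [[10, 20, 110, 220], [5, 5, 50, 50], [0, 0, 0, 0]],
        [0.9, 0.4, 0.0],
        [0, 2, 0],
        min_score=0.5,
    )
    print(dets)
```

Because NMS already happens inside the engine, the post-processing on the host side does not depend on the YOLO version's raw head layout, only on these four outputs.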


Hi @ligaz.

Hi @dusty-nv,

Thanks for the continuous effort that you put into upgrading this repository. Recently, thanks to your hints, I was able to convert the peoplenet model (based on detectnet_v2) to an .engine file and run inference on Jetson AGX Orin using detectnet.py. Now I want to customize the model to detect new classes, such as PPE (helmets, gloves, etc.). Is it possible to train such a model using the functions you wrote for transfer learning? Or is it better to train it with the TAO toolkit, then convert to .engine and run inference? What about retraining detectnet_v1, which has been supported in this work from the very beginning? Can I retrain the network on an Orin device with JetPack 5.0.1? If so, do I need to set up swap space for training?

Thanks in advance!

Please keep up the amazing work!
