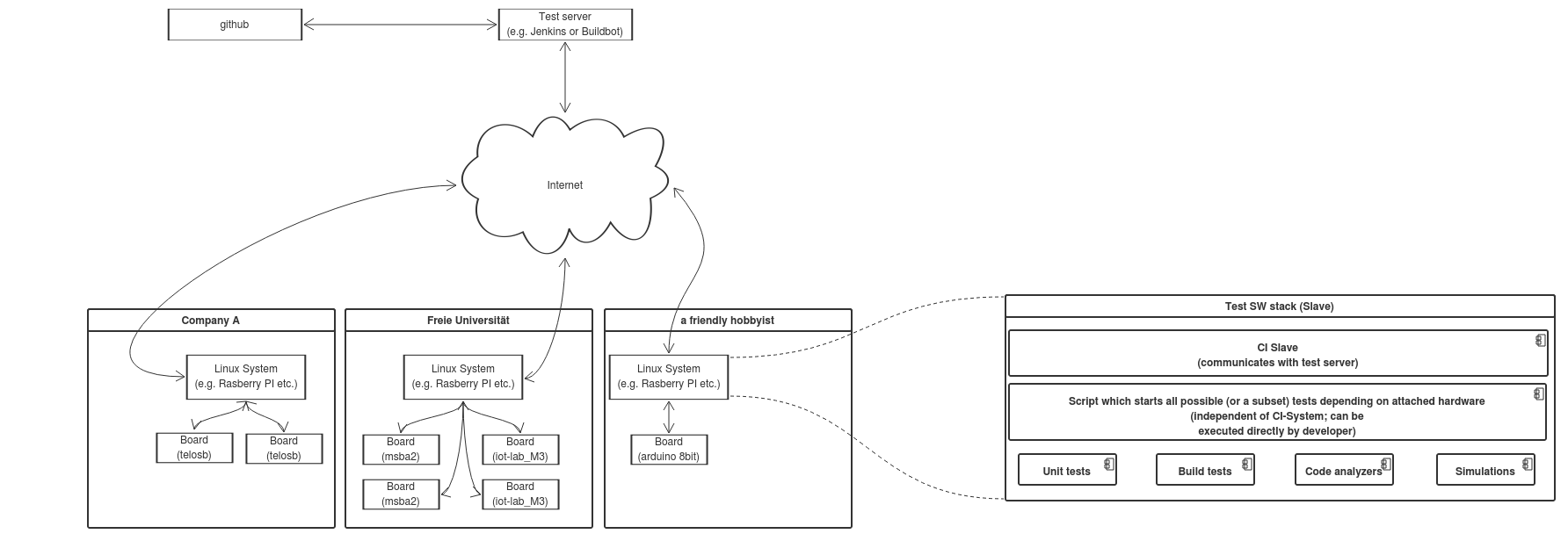

RIOT's new test system: overview of the proposed architecture

This page proposes a new test architecture intended to address the challenges presented in requirements and challenges. Each component of the architecture is described briefly on this page.

The image on this page shows a rough sketch of the system. You might have to zoom in to read the text.

The fundamental idea behind this architecture is to make the test system distributed. RIOT's maintainers control the master CI server, which interfaces with GitHub (or a similar system) and with all CI slaves.

The system works as follows: as soon as a developer triggers a test run through GitHub, the master CI server initiates a test run on all relevant CI slaves. During a test run, a slave starts a test suite, which consists of (potentially) many different types of tests (e.g. unit tests, hardware-based acceptance tests, build tests, code quality tests).
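The dispatch step described above could be sketched roughly as follows. This is only an illustration under assumptions: the `Slave` class, the test type names, and the `dispatch` function are hypothetical and not part of any existing RIOT tooling. The sketch treats a slave as "relevant" when it can run at least one of the requested test types.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Slave:
    """Hypothetical description of one CI slave."""
    name: str
    test_types: set[str]                              # kinds of tests the slave can execute
    boards: set[str] = field(default_factory=set)     # boards attached to it, if any


def select_slaves(slaves, required_types):
    """Return the slaves able to run at least one of the required test types."""
    return [s for s in slaves if s.test_types & required_types]


def dispatch(slaves, required_types):
    """Map each relevant slave name to the sorted list of test types it should run."""
    return {
        s.name: sorted(s.test_types & required_types)
        for s in select_slaves(slaves, required_types)
    }
```

For example, `dispatch(slaves, {"unit", "hardware"})` would skip a slave that only offers simulations and hand every other slave just the test types it supports.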

The master CI server provides a web interface on which build logs are displayed, communicates with the slaves, and interacts with developers through GitHub.

The slaves run on systems (read: some Linux system with or without boards attached to it) which are located at various locations / institutions. They should be as easy to set up as possible. It should be possible to easily configure which kinds of tests a slave is able to execute.
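One way to keep slave configuration simple would be a small declarative file listing the supported test types and the attached boards. The sketch below assumes a JSON format; the field names, the test type names, and the `load_slave_config` helper are all hypothetical choices made for illustration.

```python
import json

# Hypothetical example of a slave configuration file.
EXAMPLE_CONFIG = """
{
  "name": "lab-slave-1",
  "test_types": ["unit", "build", "hardware"],
  "boards": {"board-a": "/dev/ttyUSB0"}
}
"""

# Assumed names for the test types listed on this page.
KNOWN_TEST_TYPES = {
    "unit", "hardware", "simulation", "large-scale", "build", "code-quality",
}


def load_slave_config(text):
    """Parse a slave configuration and reject obviously inconsistent settings."""
    cfg = json.loads(text)
    test_types = cfg.get("test_types", [])
    unknown = set(test_types) - KNOWN_TEST_TYPES
    if unknown:
        raise ValueError(f"unknown test types: {sorted(unknown)}")
    # Hardware-based tests only make sense when at least one board is attached.
    if "hardware" in test_types and not cfg.get("boards"):
        raise ValueError("hardware tests configured but no boards attached")
    return cfg
```

Validating the file when the slave starts means a misconfigured slave fails early instead of accepting test runs it cannot execute.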

The test suite running on a slave consists of various types of tests (or sub test suites). It should be designed so that it can operate independently of the CI system. It should also be possible for a developer to manually execute the whole test suite or a single sub test suite.
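A test suite that is independent of the CI system could be a plain command-line program that the slave and a developer invoke in the same way. The following is a minimal sketch under that assumption; the sub-suite names, the placeholder functions, and the `--suite` option are hypothetical.

```python
import argparse


def run_unit_tests():
    """Placeholder: a real sub-suite would build and run the unit tests here."""
    return True


def run_build_tests():
    """Placeholder: a real sub-suite would build the applications here."""
    return True


# Registry of sub test suites; neither it nor the runner knows about any CI system.
SUB_SUITES = {
    "unit": run_unit_tests,
    "build": run_build_tests,
}


def run_suites(selected=None):
    """Run the selected sub-suites (all of them if none are given)."""
    names = selected if selected else sorted(SUB_SUITES)
    return {name: SUB_SUITES[name]() for name in names}


def main(argv=None):
    parser = argparse.ArgumentParser(description="Run the test suite or parts of it.")
    parser.add_argument("--suite", action="append", choices=sorted(SUB_SUITES),
                        help="sub-suite to run; may be given several times")
    args = parser.parse_args(argv)
    results = run_suites(args.suite)
    for name, passed in results.items():
        print(f"{name}: {'ok' if passed else 'FAILED'}")
    # Exit status 0 means every selected sub-suite passed.
    return 0 if all(results.values()) else 1
```

Calling `main(["--suite", "unit"])` runs only the unit tests, while `main([])` runs everything. A CI slave would call the same entry point, so local and automated runs behave identically.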

- unit tests: by definition, unit tests check small portions of code (usually functions) for pre-determined potential defects. Prime candidates for unit testing are API functions of modules which are not or only slightly hardware-dependent (e.g. most of the core and sys modules).

- hardware-based acceptance tests: tests which can only be run on real hardware (e.g. transceiver drivers, GPIO, sensors). These tests are mostly intended to be black-box acceptance tests. For a transceiver driver, this would mean that a test should exist which checks whether two boards with the same transceiver can send data to and receive data from each other.

- simulations: for network-related tests, like routing protocol implementation tests, a network simulation may make sense to detect subtle defects which do not manifest in small scale tests.

- large-scale hardware-based tests: ideally, it should be possible to run tests on real hardware at a larger scale. For this purpose, various IoT testbeds could be utilized. However, it is probably not possible to reserve an entire testbed indefinitely for RIOT's testing system. It may make sense to use IoT testbeds for automated weekly/monthly large-scale hardware-based tests.

- build tests: all applications and all tests should build at all times for all boards unless explicitly stated otherwise (e.g. through a Makefile blacklist or something similar). This should be ensured by build-only tests. Currently, we are using Travis for this.

- code quality tests:

  - static code analyzers should check the code for defects that can be determined at compile-time.

  - coding-standard enforcement tools can be used. However, since those tools often struggle with complex C / preprocessor constructs, they should only be allowed to return warnings but not errors.
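The warnings-only rule for coding-standard tools could be enforced when results are aggregated. The sketch below is an assumption about how that might look: the `Finding` class and the tool kind names are hypothetical, and treating static analyzer findings as errors is an assumption of this example rather than something this page prescribes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """Hypothetical record for one issue reported by a code quality tool."""
    tool_kind: str   # "static-analysis" or "coding-standard"
    message: str


def classify(finding):
    """Coding-standard findings are never more than warnings."""
    return "warning" if finding.tool_kind == "coding-standard" else "error"


def verdict(findings):
    """Summarize findings; only errors make the code quality tests fail."""
    errors = [f for f in findings if classify(f) == "error"]
    warnings = [f for f in findings if classify(f) == "warning"]
    return {"passed": not errors, "errors": len(errors), "warnings": len(warnings)}
```

With this policy, a run that produces only style findings still passes but reports the warnings, so a false positive from a style checker cannot block a merge.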